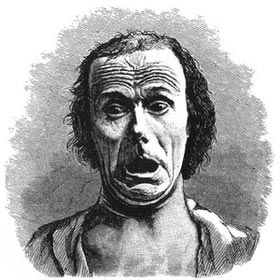

Today’s computers can perform countless calculations, but they do so in a cold and unfeeling manner. However, computer engineers are changing that as they strive to imbue machines with human-like characteristics, making human-computer interaction more friendly and natural.

By combining audio and visual data, Yongjin Wang from the University of Toronto and Ling Guan from Ryerson University, Toronto, have developed a system capable of recognizing six different human emotional states: happiness, sadness, anger, fear, surprise, and disgust. Their system can identify emotions from individuals across various cultures and languages with an 82% success rate.

Wang stated: “Human-centered computing focuses on understanding people, including facial expression recognition, emotions, gestures, speech, body movements, etc. The emotion recognition system helps computers understand the emotional state of users, allowing them to respond based on that understanding.”

The researchers’ system extracts a large number of vocal features, such as “prosodic features,” which describe the rhythm, stress, and intonation of speech.

According to Wang and Guan, emotional expression is highly diverse: vocal characteristics and facial expressions can play significant roles in conveying certain emotions, while being less relevant for others. A common example is that happiness is better recognized through certain visual cues (smiling), whereas anger is often identified through auditory cues (shouting). The researchers found that no single feature is crucial for all six types of emotions. This finding indicates that there are no clear boundaries between different emotional states, complicating the task of distinguishing these emotions for computers.

Researchers are developing human-centered computer systems capable of recognizing characteristics such as the emotional state and age of individuals. (Photo: Guillaume Duchenne)

To address these challenges, Wang and Guan employed a stepwise method. This approach adds one feature at a time and then gradually removes features, keeping only those that are relevant for recognizing emotional states. They then used a multi-class classification scheme as a “divide and conquer” strategy, separating the possible emotions based on combinations of facial and vocal characteristics.
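The add-then-remove idea can be illustrated with a minimal sketch. This is not the researchers' implementation: the feature names, the `HELPS` table, and the `toy_score` function are all hypothetical stand-ins, chosen only to show how a forward pass can pick a feature that a later backward pass finds redundant.

```python
from typing import Callable, FrozenSet, List, Sequence, Tuple

EMOTIONS = {"happiness", "sadness", "anger", "fear", "surprise", "disgust"}

# Hypothetical table: which emotions each made-up feature helps to recognize.
HELPS = {
    "mouth_shape": {"happiness", "sadness", "surprise", "disgust"},
    "voice_pitch": {"happiness", "sadness", "anger"},
    "brow_motion": {"surprise", "disgust", "fear"},
    "background_noise": set(),
}


def toy_score(subset: FrozenSet[str]) -> int:
    """Hypothetical quality score: 10 points per emotion covered,
    minus 1 point per feature used (a crude cost for complexity)."""
    covered = set().union(*(HELPS[f] for f in subset))
    return 10 * len(covered & EMOTIONS) - len(subset)


def stepwise_select(
    features: Sequence[str], score: Callable[[FrozenSet[str]], int]
) -> Tuple[List[str], int]:
    """Forward addition followed by backward removal of features."""
    selected: List[str] = []
    best = score(frozenset())
    remaining = list(features)

    # Forward pass: repeatedly add the one feature that raises the score most.
    while remaining:
        trials = [(score(frozenset(selected + [f])), f) for f in remaining]
        top_score, top_feature = max(trials, key=lambda pair: pair[0])
        if top_score <= best:
            break  # no remaining feature improves the score
        selected.append(top_feature)
        remaining.remove(top_feature)
        best = top_score

    # Backward pass: drop any feature whose removal does not lower the score.
    removed = True
    while removed and len(selected) > 1:
        removed = False
        for feature in list(selected):
            trial = [f for f in selected if f != feature]
            trial_score = score(frozenset(trial))
            if trial_score >= best:
                selected, best, removed = trial, trial_score, True
                break

    return selected, best


chosen, value = stepwise_select(list(HELPS), toy_score)
print(chosen, value)
```

With this toy table, the forward pass first picks `mouth_shape` because it covers the most emotions on its own, then adds `voice_pitch` and `brow_motion`. The backward pass then drops `mouth_shape`, since the other two features together already cover all six emotions, leaving `['voice_pitch', 'brow_motion']` with a score of 58.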

“The hardest part of helping computers detect human emotions is the vast differences and diversity of vocal and facial expressions influenced by factors such as language, culture, and individual personality. Furthermore, according to our research, there is no distinct separation between different emotions. Accurately identifying different patterns is a challenging issue.”

The researchers believe that someday, computers capable of recognizing human emotions could be applied in customer service, video games, security monitoring, and educational software.

Age Estimation

While most people are quite skilled at distinguishing whether someone is sad or happy, determining another person’s age just by looking at them presents a much greater challenge, at least for humans. Computer engineers Yun Fu and Thomas Huang from the University of Illinois have created a system that estimates a person’s age based on their facial features.

“Age is one of the most important characteristics inferred from personal circumstances, biometric information, and social context. Human-centered computer systems can gather more information about individuals in social groups than standard computer systems by focusing on how people organize and improve their lives around computer technology.”

Fu and Huang’s research is based on facial image data from over 1,600 subjects, half of whom were women and the other half men. Using results from a previous age estimation system as well as the age characteristics they identified, the researchers trained a computer system capable of estimating ages from 0 to 93 years. Their most successful algorithm can estimate a person’s age, based on just a few images, to within five years 50% of the time and to within ten years 83% of the time.
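Figures such as “within five years 50% of the time” are the share of estimates whose absolute error falls inside a given tolerance. The sketch below shows that arithmetic on four invented age pairs; the data and the function name `fraction_within` are hypothetical and do not come from the researchers’ study.

```python
from typing import Sequence

# Invented example data: true ages and a system's estimates for four people.
TRUE_AGES = [5, 23, 40, 67]
PREDICTED = [8, 30, 38, 80]


def fraction_within(
    true_ages: Sequence[int], predicted: Sequence[int], tolerance: int
) -> float:
    """Share of estimates whose absolute error is at most `tolerance` years."""
    if len(true_ages) != len(predicted) or not true_ages:
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(abs(t - p) <= tolerance for t, p in zip(true_ages, predicted))
    return hits / len(true_ages)


print(fraction_within(TRUE_AGES, PREDICTED, 5))   # errors 3, 7, 2, 13 -> 0.5
print(fraction_within(TRUE_AGES, PREDICTED, 10))  # errors 3, 7, 2, 13 -> 0.75
```

Because every estimate within five years is also within ten years, the ten-year figure can never be lower than the five-year figure, which matches the 50% and 83% reported above.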

The researchers predict that age recognition technology could be applied to prevent children from accessing adult websites, stop vending machines from selling alcohol to underage individuals, and identify the age group of those who spend more time viewing certain advertisements.

By integrating human factors into computers, researchers continue to bridge the gap between technology and humanity. Undoubtedly, these technologies will have numerous applications in the future.