Self-driving cars are an inevitable trend in the automotive industry. Within the next 10 years, self-driving vehicles will be “everywhere” on the streets. A pressing question follows: if an accident is unavoidable, how will these vehicles decide whom to hit and whom to avoid?

According to the technology website TechCrunch, scientist Iyad Rahwan and his colleagues at the Massachusetts Institute of Technology are conducting research on the ethical implications of self-driving cars. Rahwan states: “Every time a self-driving car performs a complex maneuver, it must also consider the potential risks to various individuals.”

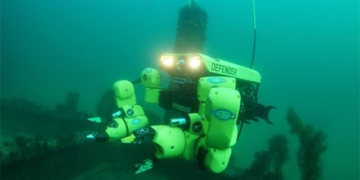

Rahwan has posed a hypothetical scenario involving an unavoidable accident. A person suddenly falls in front of a fast-moving self-driving car, and at the same moment there is a concrete barrier ahead and to the left of the car. How will the car react? Will it swerve to avoid the fallen person and crash into the barrier, killing the driver, or will it continue straight, saving the driver but putting the fallen person at risk?

How will the self-driving car handle this situation?

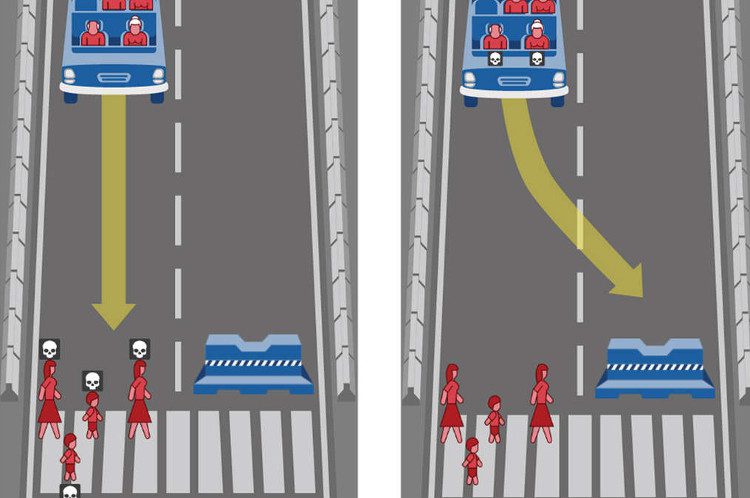

Rahwan asserts that the scenario of a self-driving car causing harm to humans is currently hypothetical, but this could very well occur in the future as streets become crowded with autonomous vehicles. Consequently, questions about the ethical dimensions of self-driving cars are being raised daily. For instance, how will a self-driving car react when overtaking a cyclist or a pedestrian?

Ryan Jenkins, a Philosophy Professor at California Polytechnic State University, remarks: “When you drive on the street, you put those around you at risk. When we pass a cyclist or a pedestrian, we often create a safe distance from them. Even if we are confident that our car won’t hit them, unexpected events can occur. A cyclist may fall, or a pedestrian may trip.”

According to Noah Goodall, a scientist at the Virginia Transportation Research Council, self-driving cars will need to slow down and drive cautiously whenever they detect pedestrians nearby, so that they can cope with a pedestrian who intentionally steps in front of the vehicle.

While human drivers can handle such situations through intuition, this is not simple for artificial intelligence. Self-driving software needs to clearly define rules for handling unexpected situations or rely on general driving regulations. It is hoped that lawmakers will soon draft guidelines for self-driving vehicles.

Are Manufacturers Ready?

How do manufacturers of self-driving cars address these ethical concerns? In many cases, they remain silent. Although the ethical issues surrounding self-driving cars attract significant attention, the automotive industry is trying to sidestep the discussion. When questioned, an executive at Daimler AG stated that the company’s self-driving Mercedes-Benz models would protect humans at all costs. He asserted: “No software or self-driving system deliberates over the value of human life.” A Daimler representative maintained that the ethics of self-driving vehicles is not a real issue, and that the company “is focusing on risk mitigation solutions for cars to avoid getting into dilemmas.”

Nonetheless, scientists continue to assert that the risks associated with self-driving cars are unavoidable. Examples include brake failure, being hit by another vehicle, or a cyclist, pedestrian, or pet suddenly ending up in front of the car. The situation in which a self-driving car must make a difficult choice is therefore real.

Daimler claims to value every life equally, but this stance raises concerns that the company may not have clear rules for handling situations in which human lives are at stake.

Google’s self-driving cars will soon hit the market.

Meanwhile, Google has developed a detailed guideline for handling self-driving car accidents. In 2014, Sebastian Thrun, the founder of Google X, stated that the company’s self-driving cars would aim to hit smaller targets. “In the event of an unavoidable collision, the car will hit the smaller target.”

A 2014 patent from Google describes a similar scenario, in which a self-driving car avoids a truck in its own lane by swerving into the adjacent lane, where it approaches a smaller car. In that scenario, hitting the smaller vehicle would be the safer outcome.

Hitting a smaller target is an ethical decision, a choice to protect passengers by minimizing harm from the accident. However, this would shift the risk onto pedestrians or passengers of the smaller vehicle. Indeed, Professor Patrick Lin from California Polytechnic State University has noted: “The smaller targets could be a baby stroller or a child.”

In March 2016, Chris Urmson, the head of Google’s self-driving division, described a new rule in an interview with the Los Angeles Times: “Our cars will do their best to avoid unprotected individuals, namely cyclists and pedestrians. After that, the car will try to avoid moving objects on the road.” Compared with the rule of hitting the smaller target, this new approach is more practical: Google’s cars will steer away from those most vulnerable in an accident. Of course, this may not appeal to vehicle owners, who would want the car to protect their own lives at all costs.
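The priority ordering in Urmson's quote can be pictured as a simple ranking. The Python sketch below is only an illustration under stated assumptions, not Google's actual software: the `Obstacle` categories, the `choose_path` function, and the extra "static object" tier are hypothetical names introduced here. Each candidate path is scored by the highest-priority obstacle on it, and the path with the lowest score is preferred.

```python
from enum import IntEnum


class Obstacle(IntEnum):
    """Hypothetical categories; a higher value means a higher priority to avoid."""
    STATIC_OBJECT = 0       # assumption: this tier is not mentioned in the quote
    MOVING_OBJECT = 1       # "moving objects on the road"
    UNPROTECTED_PERSON = 2  # "cyclists and pedestrians"


def choose_path(paths):
    """Return the name of the path whose worst obstacle ranks lowest.

    paths maps a path name to the list of Obstacle values on that path.
    An empty list means the path is clear and always wins.
    Ties are resolved in favor of the path listed first.
    """
    def severity(obstacles):
        # The worst thing on the path decides its score; -1 marks a clear path.
        return max(obstacles, default=-1)

    return min(paths, key=lambda name: severity(paths[name]))


if __name__ == "__main__":
    options = {
        "stay": [Obstacle.UNPROTECTED_PERSON],
        "left": [Obstacle.MOVING_OBJECT],
        "right": [Obstacle.STATIC_OBJECT, Obstacle.MOVING_OBJECT],
    }
    print(choose_path(options))
```

A real system would weigh speed, distance, and uncertainty rather than a fixed three-level ranking. The sketch only shows why an explicit ordering is easier to state and audit than a rule such as "hit the smaller target."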

Should Self-Driving Cars Distinguish Between Gender, Age, and Social Status of Pedestrians?

Another issue is whether self-driving cars need to differentiate between the gender, age, and social status of pedestrians in order to make appropriate decisions. For example, consider two groups of pedestrians, one consisting of doctors and pregnant women, and another consisting of the elderly and young adults. In an unavoidable situation, which group would the self-driving car choose to avoid? It may be a long time before self-driving cars can differentiate between such groups.

More Workshops Needed

How will we address the ethical issues of self-driving cars? There is a consensus that more discussions are needed among scientists, legal experts, and automotive manufacturers on this issue.

Last September, the National Highway Traffic Safety Administration (NHTSA) released a report stating: “Automakers, along with other entities, must collaborate with law enforcement and stakeholders (such as drivers, passengers, etc.) to address potential risk scenarios, ensuring that decisions are made purposefully.”

Wayne Simpson, an expert working for a consumer protection agency, also agrees with the NHTSA. Simpson argues: “The public has the right to know whom a self-driving car will prioritize on the street: the passenger, the driver, or the pedestrian? What factors does it consider? If these questions are not adequately answered, manufacturers will program self-driving cars according to their own regulations. These regulations may not align with societal norms, ethics, or legal standards.”

Some technology companies seem to have taken this feedback into account. Apple has stated that it is conducting “thorough surveys” to gather opinions from industry leaders, consumers, federal agencies, and experts. Ford has stated that it “is collaborating with several major universities and industry partners” in the development of autonomous vehicles. At the same time, Ford said that the scenarios posed above are somewhat exaggerated. Its approach to the ethics of self-driving cars is to build better vehicles rather than to dwell on unrealistic hypotheticals that cannot be effectively resolved.

Tesla equips all its vehicle models with a self-driving system.

In the long run, the most ethical decision regarding self-driving cars lies in the very nature of these vehicles. Self-driving cars are significantly safer than human drivers. Experts predict that self-driving technology could eliminate up to 90% of traffic accidents.

However, to achieve this, we need to establish robust laws that prevent mistakes that could lead to disputes or litigation. As Rahwan and his colleagues Azim Shariff and Francois Bonnefon wrote in The New York Times: “The sooner self-driving cars are approved, the more lives will be saved. But we also need to carefully consider both the psychological and technical aspects of self-driving vehicles. This technology will free us from the monotonous, time-wasting, and dangerous task of driving that we have been doing for over a century.”