Generative AI is developing at an astonishing pace, blurring the line between reality and imagination. Just a few days ago, OpenAI introduced Sora, an AI model that generates short videos from text. Now Google has responded with a research paper on Genie, an AI model capable of generating playable 2D video games from a text prompt or a single image.

However, Genie is still in development and has not yet been released to the market.

Developed by the Open-Endedness team at Google DeepMind, this groundbreaking research project holds significant potential for the future of entertainment, game development, and even robotics. Google states that Genie is an “interactive world model” trained on a massive dataset of 200,000 hours of unlabeled video, primarily consisting of 2D platformer games found on the internet.

Unlike traditional AI models that often require detailed instructions and labeled data, Genie learns by observing the actions and interactions within these videos, allowing it to create 2D games from a simple text description or a single image.

It may seem like magic, but as explained in the research paper on Genie by Google DeepMind, the internal workings are relatively complex:

Genie consists of three core components:

Video Tokenization: Imagine Genie as a skilled chef preparing a complex dish. Just as a chef breaks down ingredients into smaller parts for easier handling, the video tokenizer compresses the massive video data into compact discrete units known as “tokens.” These tokens serve as the basic building blocks that help Genie understand the visual world.

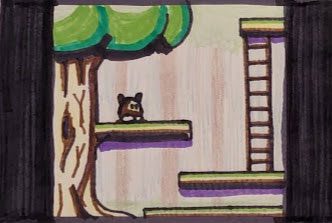

From just a single static image, Genie can generate a simple playable 2D game, as shown above.

Latent Action Model: In the second step, once the video data has been broken down into tokens, the Latent Action Model takes over. Like an experienced culinary expert, it meticulously analyzes the transitions between consecutive frames in the video. Because the training videos carry no action labels, it has to infer from these transitions alone what the player did, and it settles on eight fundamental actions, the essential “seasonings” of Genie. These actions can correspond to moves such as jumping, running, and interacting with objects in the game environment.

However, the image quality remains quite crude, and the game content is still relatively simple.

Dynamics Model: Finally, we have the Dynamics Model, the component that brings everything together. Similar to how a chef predicts how flavors will interact based on the selected ingredients, this model predicts the next frame in the video sequence. It takes the current state of the game world together with the player’s chosen action and generates the next visual outcome accordingly. Repeating this prediction frame after frame is what ultimately creates an interactive gaming experience.
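To make the three components a little more concrete, here is a toy sketch in Python. It is not Genie's actual implementation, which relies on large learned neural networks; every name, constant, and rule in it (`tokenize`, `infer_latent_action`, `predict_next`, the patch size, the eight hand-written moves) is a hypothetical stand-in chosen only to illustrate the flow from frames to tokens, from a pair of frames to one of eight discrete actions, and from tokens plus an action to the next frame.

```python
from typing import List, Tuple

Grid = List[List[int]]  # a frame of pixels, or a grid of token ids

PATCH = 2        # assumed patch size (illustrative only)
NUM_TOKENS = 4   # assumed token vocabulary size (illustrative only)

# Eight hypothetical latent actions, written as (row, col) shifts.
# In the real model the actions are learned and carry no fixed meaning.
ACTIONS: List[Tuple[int, int]] = [
    (-1, -1), (-1, 0), (-1, 1),
    (0, -1),           (0, 1),
    (1, -1),  (1, 0),  (1, 1),
]


def tokenize(frame: Grid) -> Grid:
    """Toy 'video tokenizer': bucket each PATCH x PATCH block's mean brightness."""
    rows, cols = len(frame) // PATCH, len(frame[0]) // PATCH
    tokens = []
    for r in range(rows):
        row = []
        for c in range(cols):
            patch = [frame[r * PATCH + i][c * PATCH + j]
                     for i in range(PATCH) for j in range(PATCH)]
            mean = sum(patch) / len(patch)
            row.append(min(int(mean * NUM_TOKENS / 256), NUM_TOKENS - 1))
        tokens.append(row)
    return tokens


def find_sprite(tokens: Grid) -> Tuple[int, int]:
    """Return the position of the brightest token, treated as the player."""
    best = (0, 0)
    for r, row in enumerate(tokens):
        for c, tok in enumerate(row):
            if tok > tokens[best[0]][best[1]]:
                best = (r, c)
    return best


def infer_latent_action(prev_tokens: Grid, next_tokens: Grid) -> int:
    """Toy 'latent action model': pick the action closest to the observed move."""
    (r0, c0), (r1, c1) = find_sprite(prev_tokens), find_sprite(next_tokens)
    dr, dc = r1 - r0, c1 - c0
    return min(range(len(ACTIONS)),
               key=lambda a: (ACTIONS[a][0] - dr) ** 2 + (ACTIONS[a][1] - dc) ** 2)


def predict_next(tokens: Grid, action: int) -> Grid:
    """Toy 'dynamics model': move the player token according to the action."""
    r, c = find_sprite(tokens)
    dr, dc = ACTIONS[action]
    nr = max(0, min(len(tokens) - 1, r + dr))      # stay inside the grid
    nc = max(0, min(len(tokens[0]) - 1, c + dc))
    nxt = [row[:] for row in tokens]
    sprite = nxt[r][c]
    nxt[r][c] = 0
    nxt[nr][nc] = sprite
    return nxt


def make_frame(sprite_row: int, sprite_col: int, size: int = 8) -> Grid:
    """Build a black frame with one bright PATCH x PATCH player block."""
    frame = [[0] * size for _ in range(size)]
    for i in range(PATCH):
        for j in range(PATCH):
            frame[sprite_row * PATCH + i][sprite_col * PATCH + j] = 255
    return frame


before = tokenize(make_frame(1, 1))
after = tokenize(make_frame(1, 2))
action = infer_latent_action(before, after)   # inferred from the two frames alone
rollout = predict_next(before, action)        # the sketch's guess at the next frame
```

In this sketch the player block moves one cell to the right between the two frames, so the inferred action is the "shift right" entry, and replaying that action on the first frame reproduces the second. The real system replaces each hand-written rule with a trained model, but the division of labor between the three parts is the same as described above.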

Nevertheless, Genie is still in development and has many limitations. For instance, the visual quality is quite poor, and the model currently runs at only about 1 FPS, far too slow for smooth, real-time play.

Even so, the potential of Genie raises questions about the future of jobs related to game development, especially for lower-level roles in the game development process. A similar trend is occurring in the film industry; recently, a Hollywood billionaire mentioned using AI to edit his face in movies instead of relying on makeup artists for character transformations.