The human brain is vastly different from the silicon chips inside computer processors. Simply put, the brain is powered by its blood supply, while processors run on electricity. Yet this difference has not stopped scientists from comparing the two. As Alan Turing put it in 1952: “We are not interested in the fact that the brain has the consistency of cold porridge.” It sounds a little unsettling, but Turing’s point was simple: the physical material does not matter; what matters is the computation it can perform.

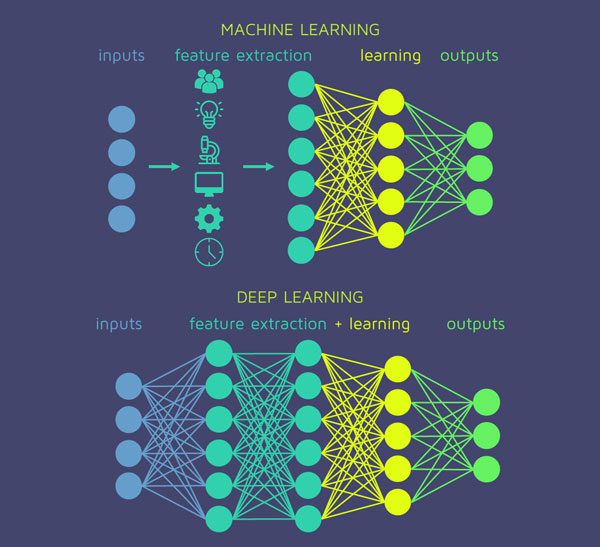

Today, the most powerful artificial intelligence systems use a type of machine learning called deep learning. Their algorithms learn by processing vast amounts of data through layers of interconnected nodes known as deep neural networks. As the name suggests, these networks were inspired by the neural networks of the brain, with each node modeled on a biological neuron, or at least on what neuroscientists knew about neurons in the 1950s, when the influential perceptron model was born. Since then, our understanding of the computational complexity of a single neuron has expanded dramatically.

In fact, a biological neuron is known to be far more complex than a node in a deep neural network. But how much more complex?

To answer this question, three scientists from the Hebrew University of Jerusalem in Israel, David Beniaguev, Idan Segev, and Michael London, ran simulations in which they trained a deep neural network to mimic the behavior of a single biological neuron. The results showed that to capture the complexity of one neuron, the network needed 5 to 8 layers of interconnected “artificial neurons.”

The authors did not expect such complexity. Beniaguev had initially assumed that three or four layers would be enough to capture the computational power of a single neuron.

Timothy Lillicrap of DeepMind, the Google-owned AI research company, says the result suggests it may be necessary to rethink the long-standing habit of loosely equating a neuron in the brain with a neuron in a machine learning model. The key difference between the two lies in how they process incoming information. Both receive input signals and use them to decide whether to send a signal of their own to other neurons. An artificial neuron makes this decision with a single, simple calculation, but decades of research have shown that the process is far more complicated in biological neurons.
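To make the “single, simple calculation” concrete, here is a minimal sketch of a perceptron-style artificial neuron: it multiplies each input by a weight, adds a bias, and fires only if the total crosses a threshold. The function name and the weight values are hypothetical, chosen purely for illustration.

```python
def perceptron(inputs, weights, bias):
    """Perceptron-style artificial neuron: one weighted sum, one threshold test."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # The entire "decision" is this one weighted sum plus a bias term.
    activation = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Fire (1) if the sum is above zero, stay silent (0) otherwise.
    return 1 if activation > 0 else 0


# Hypothetical example: three inputs with hand-picked weights.
print(perceptron([1, 0, 1], [0.6, -0.4, 0.5], bias=-1.0))  # prints 1
```

Everything this neuron “knows” lives in one line of arithmetic. That simplicity is exactly what the research described here calls into question when such units are compared with real neurons.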

Computational neuroscientists use an input-output function to model the relationship between the signals a biological neuron receives through its long, treelike branches, called dendrites, and the neuron’s decision to send a signal of its own.
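As a rough illustration of what an input-output function looks like, the sketch below maps millisecond-by-millisecond input spikes at several synapses to a millisecond-by-millisecond train of output spikes. It uses a leaky integrate-and-fire model, a standard textbook simplification. This is an assumption made for illustration, not the detailed biophysical neuron model used in the study: it treats the neuron as a single point and ignores the dendritic tree entirely. The parameter values are hypothetical.

```python
import math


def lif_input_output(spike_trains, weights, tau_ms=20.0, threshold=1.0, dt_ms=1.0):
    """Toy input-output function: input spikes per ms -> output spikes per ms.

    spike_trains: list over time steps; each entry holds one 0/1 value per synapse.
    weights: hypothetical synaptic weight for each synapse.
    """
    decay = math.exp(-dt_ms / tau_ms)  # fraction of membrane potential kept each step
    v = 0.0  # membrane potential, in arbitrary units
    output = []
    for step in spike_trains:
        if len(step) != len(weights):
            raise ValueError("each time step needs one value per synapse")
        # Leak a little, then add the weighted input arriving this millisecond.
        v = v * decay + sum(s * w for s, w in zip(step, weights))
        if v >= threshold:
            output.append(1)  # the neuron "decides" to fire
            v = 0.0  # reset after an output spike
        else:
            output.append(0)
    return output


print(lif_input_output([[1, 0], [0, 1], [1, 1], [0, 0]], [0.4, 0.4]))  # prints [0, 0, 1, 0]
```

A real pyramidal neuron’s dendrites process inputs in ways this point model cannot capture, which is why reproducing such a neuron’s input-output function turns out to demand a deep network.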

This input-output function is the key to simulating the complexity of a single neuron. The researchers began by building a large-scale simulation of the input-output function of a pyramidal neuron from the rat cortex, a type of neuron with distinct trees of dendritic branches at its top and bottom. They then fed this simulation into a deep neural network with up to 256 artificial neurons per layer, and kept adding layers until the network reproduced the simulated neuron’s input and output with 99% accuracy at millisecond resolution. The network succeeded with at least five but no more than eight layers. In most of the networks, this amounted to about 1,000 artificial neurons to represent a single biological neuron.
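The search procedure described above, adding layers until accuracy reaches 99%, can be sketched as a simple loop. Here `evaluate(depth)` is a hypothetical stand-in for “train a network with this many layers and measure how accurately it reproduces the simulated neuron”, and the accuracy values are invented for illustration; they are not the study’s results.

```python
from typing import Callable, Optional


def smallest_sufficient_depth(
    evaluate: Callable[[int], float],
    target_accuracy: float = 0.99,
    max_depth: int = 10,
) -> Optional[int]:
    """Return the fewest layers whose network reaches target_accuracy, or None.

    `evaluate` is a hypothetical callback: in a real experiment it would train
    a fresh network of the given depth and return its held-out accuracy.
    """
    for depth in range(1, max_depth + 1):
        if evaluate(depth) >= target_accuracy:
            return depth
    return None


# Invented accuracy curve for illustration only; NOT the study's numbers.
TOY_ACCURACY = {1: 0.80, 2: 0.88, 3: 0.93, 4: 0.97, 5: 0.991, 6: 0.993}

depth = smallest_sufficient_depth(lambda d: TOY_ACCURACY.get(d, 0.993))
print(depth)  # prints 5

# Upper bound on network size if every layer used the maximum width of 256.
MAX_WIDTH = 256
print(5 * MAX_WIDTH, 8 * MAX_WIDTH)  # prints 1280 2048
```

The last line shows why “5 to 8 layers” and “about 1,000 artificial neurons” are compatible: at the full width of 256, five to eight layers would hold 1,280 to 2,048 units, so a total near 1,000 implies that layers were, on average, narrower than the maximum.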

These numbers should be interpreted with care. As Michael London puts it: “The relationship between the number of layers and the complexity of the system is not obvious.” Needing 1,000 artificial neurons to simulate one biological neuron does not mean that a biological neuron is exactly 1,000 times as complex. In principle, a network with exponentially more artificial neurons per layer might capture the same behavior with a single layer, but it would likely take far more data and time to learn.

This work by the Israeli team also opens new directions for future AI research. Some researchers argue that, in light of these results, neuroscientists should pay closer attention to the computational power of individual neurons such as the pyramidal neuron.