Many sources suggest that the reason for CEO Sam Altman’s abrupt dismissal from OpenAI was the research team’s progress towards creating Artificial General Intelligence (AGI).

OpenAI, the creator of ChatGPT, went through a tumultuous weekend after the company's board of directors suddenly fired CEO Sam Altman. A series of internal upheavals followed, including several changes of interim CEO and a collective letter in which employees demanded that the board resign and reinstate Altman, threatening to quit if their demands were not met.

OpenAI's internal situation has since stabilized somewhat, with Sam Altman back as CEO and the previous board stepping down in favor of a new one. However, the question of why the board suddenly dismissed Altman has still not been adequately answered.

OpenAI CEO Sam Altman, at the center of last weekend’s turmoil at the company that created ChatGPT

According to sources cited by Reuters, before deciding to fire Sam Altman, the OpenAI board received warnings about a breakthrough that could bring superintelligent AI closer and might pose risks to humanity. Concerned that Altman was acting recklessly, the board moved quickly to dismiss him. Many believe this breakthrough refers to progress by OpenAI's researchers toward AGI.

OpenAI's researchers are not alone in their concern: many scholars around the world have also voiced worries that AI research carried out by companies globally could eventually produce AGI.

AGI – AI Capable of Human-Like Thinking

So, what is AGI, and how does it differ from chatbots like ChatGPT, Bard, and Bing Chat, which are currently being developed by tech companies? Why are researchers so fearful of it?

While there is no precise definition, many experts describe AGI as a highly autonomous system that can outperform humans at most economically valuable work. AGI is not specialized in one specific area like most current AI systems. In theory, it could adapt and generalize across a wide variety of tasks and might even achieve human-like traits such as consciousness and intuition. OpenAI's stated mission is to ensure that AGI benefits all of humanity.

AGI is fundamentally different from the chatbots people commonly use today. Chatbots such as ChatGPT, Bing Chat, and Bard are built on large language models (LLMs): AI trained on vast amounts of data, which generate answers from the patterns they have learned from that data. AGI, by contrast, is in theory a type of AI that could perform intellectual tasks with human-like consciousness and intuition.

Many AI experts remain skeptical that AGI will be achieved in the near future, given the complexity and challenges involved. Part of this skepticism stems from how poorly human-level intelligence is understood, let alone replicated in machines. Although science fiction has popularized the concept of AGI, reality is still far from the capabilities it describes.

Why Many Scholars Fear AGI

Although AGI currently exists only in theory, its potential capabilities have raised significant concerns among experts and scholars in the field. As a fully autonomous and potentially even self-aware system, AGI could surpass humans at most economically valuable tasks.

Moreover, because it could learn continuously without fatigue, AGI might eventually become smarter than humans. Experts worry about the risks such a technology could pose if we ultimately prove unable to control it.

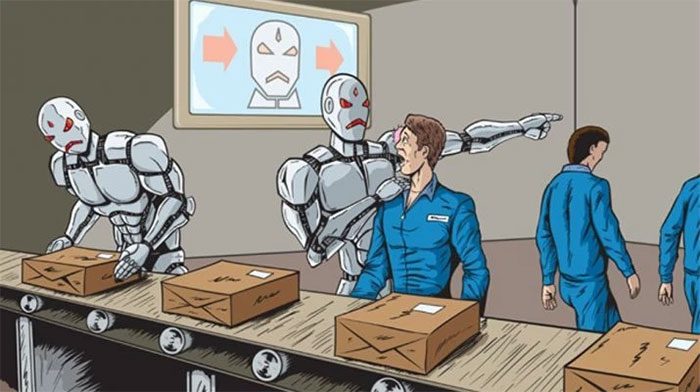

Even without superhuman intelligence, AGI could significantly disrupt the labor market by replacing human workers in many fields. Its development also raises ethical concerns.

Experts also worry that AGI could threaten humanity indirectly: combined with other technological advances, misuse of AGI could pose further dangers to humanity itself.

These concerns have led many AI researchers and experts in the field to call for technology companies to temper the development of this advanced AI technology. However, the race to develop AI among tech companies, and even among nations, is overshadowing such calls.

Could this be the reason behind the hasty decision to fire OpenAI CEO Sam Altman? So far, it remains speculation. However, if the dismissal is indeed related to a technology the company has developed, OpenAI may need to explain it.

Commenting on the apology from Ilya Sutskever, OpenAI's chief scientist, who had joined the rest of the board in ousting Sam Altman, Elon Musk said: "Why did he take such drastic action? If OpenAI is doing something that could pose a potential danger to humanity, the world needs to know."