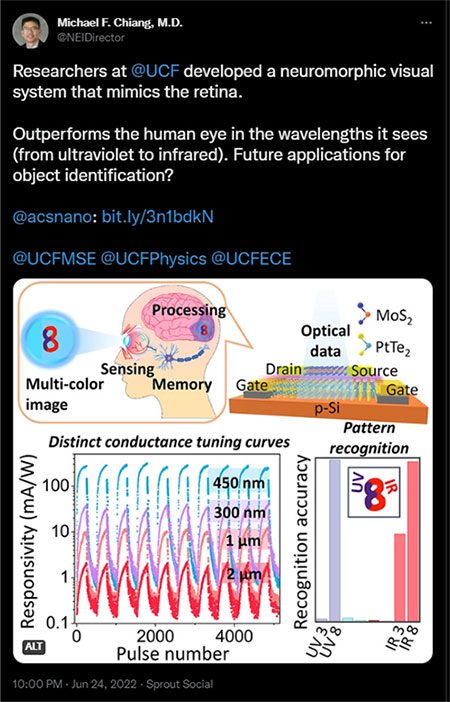

The high-tech bionic eyes of Arnold Schwarzenegger's character in the movie “The Terminator” are no longer pure science fiction.

Researchers at the University of Central Florida (UCF) have developed an artificial intelligence (AI) device capable of mimicking the human retina.

The researchers state that this technology features neural-like devices that function like “smart pixels” in cameras by sensing, processing, and recognizing images simultaneously. It is expected to be applicable in electronic devices and robotics within the next 5 to 10 years.

With this new technology, AI can instantly analyze and recognize what it sees, such as providing automatic descriptions of photos taken with cameras or phones. Additionally, this technology holds immense value for applications in autonomous vehicles.

This technology, detailed in a recent study published in the journal ACS Nano, also outperforms the human eye in the range of wavelengths it can perceive, from ultraviolet through visible light to the infrared spectrum.

The artificial eye builds on existing smart imaging technology, such as that found in self-driving cars, which requires separate sensing, memory, and processing components. The researchers noted that by integrating these three functions into a single device, the UCF design processes and recognizes images much faster than existing technologies. It is also compact: hundreds of the devices fit on a chip just one inch wide.

The principal investigator of the research team, Tania Roy, told UCF Today: “This will change the way AI recognizes today.” The technology builds on the team's earlier work, which created brain-like devices that enable AI to operate in remote regions and in space.

Roy stated: “We have devices that function like the neural synapses of the human brain, but we do not provide direct images to them. Now, by adding imaging sensor capabilities to them, we have neural-like devices that act like ‘smart pixels’ in cameras by simultaneously sensing, processing, and recognizing images.”

For autonomous vehicles, the flexibility of the device will allow for safer driving under various conditions, including at night, as the technology can “see” in wavelengths that human eyes cannot perceive, said Molla Manjurul Islam, the lead author of the study and a researcher in the Physics Department at UCF.

Islam said: “If you are in your self-driving car at night and the car’s imaging system only operates at a specific wavelength, [it will not see what is in front of it]. But in our case, with our device, it can actually see across all conditions.”

He added: “No reported device can operate simultaneously in the ultraviolet and visible wavelength ranges as well as in the infrared range, so this is the most unique aspect of this device.”

The key to the technology is the engineering of nanoscale surfaces made of molybdenum disulfide and platinum ditelluride, which enable multi-wavelength sensing and memory. This work was conducted in close collaboration with YeonWoong Jung, an assistant professor with joint appointments at UCF’s Nano Science and Technology Center and the Department of Materials Science and Engineering, part of the College of Engineering and Computer Science at UCF.

In tests of the device, the researchers achieved accuracy rates between 70% and 80%, suggesting that the technology could likely be built into hardware and used in AI for future robots.

In older AI vision systems, images entering the camera are typically first converted into digital form and then processed by algorithms. The volume of input data is often very large, and most of it is redundant. Passing it through a series of conversions from one form to another results in low frame rates and very high energy consumption.

Previously, researchers at the Vienna Institute of Quantum Light, Austria, also created a new type of artificial eye that combines light-sensing components with a neural network on a single small chip. The chip can process images in just a few nanoseconds. The design aims to mimic animal eyes, which preprocess input data before sending signals to the brain.