Our perception of a color depends on whether we see it on our left or on our right. Researchers say this demonstrates how language can alter the way we perceive the world.

The left hemisphere of the brain, often described as “language-friendly,” distinguishes colors with different names more quickly than shades of the same color.

The idea that language can shape perception is not new. In the 1930s, American linguist Benjamin Lee Whorf proposed a controversial hypothesis that the structure of a language influences how its speakers think. Subsequent research has hinted that this may be true in some cases (for example, there are tribes whose members do not count because their language has no words for numbers). However, whether language affects how we perceive the world remains an open question.

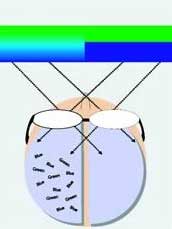

Richard Ivry of the University of California, Berkeley, and colleagues suspected that the way the same visual information can affect the left and right hemispheres of the brain differently might be relevant here. Language is processed primarily by the left hemisphere, which also processes signals from the left side of the retina in both of our eyes.

Since light from objects on our right mainly strikes the left side of the retina, the researchers hypothesized that our perception of the color of objects on the right would be more strongly influenced by language. Conversely, objects on the left activate the right hemisphere, so the influence of language there should be minimal.

To test this idea, they had a group of participants observe a picture featuring green squares arranged in a circle. Then, the research team measured how long it took these individuals to identify a single square of a different color, located either on the right or left.

The odd square was either a darker green than the other squares or light blue. When it was on the left, volunteers detected both types (dark green and light blue) in roughly the same amount of time. When it was on the right, however, participants took longer to identify the dark green square than the light blue one.

The researchers attribute this to the fact that light blue has a distinctly different name from green, which allows the “language-friendly” left hemisphere to perceive the color difference more quickly than it can for the dark green square.

Ivry and colleagues tested the hypothesis further by asking participants to memorize a series of words while repeating the visual test. When the language center in the left hemisphere was kept “busy” in this way, it had less opportunity to affect visual perception, and, as predicted, participants took the same amount of time to identify the light blue and the dark green square on the right side of the image.

“This demonstrates that the effect in the first experiment was indeed due to language,” commented Michael Corballis from the University of Auckland, New Zealand. His own experiments yielded similar results.

Ivry’s team is now investigating whether similar effects extend beyond color to familiar objects, such as cats or cars. Previous studies have shown that we do perceive everyday objects differently depending on their location and their names.