Cerebras's Wafer Scale Engine 3 (WSE-3) chip contains 4 trillion transistors and is set to power the upcoming Condor Galaxy 3 supercomputer, which will have a capacity of 8 exaFLOPS.

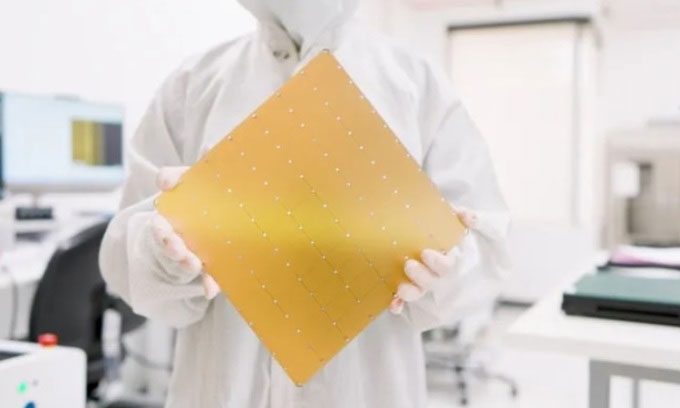

Cerebras Wafer Scale Engine 3 Chip. (Photo: Cerebras).

Engineers at Cerebras have built the world's largest computer chip, which houses 4 trillion transistors (active semiconductor components typically used as amplifiers or electronic switches), according to Live Science. The chip is intended to power extremely powerful artificial intelligence (AI) supercomputers. The Wafer Scale Engine 3 (WSE-3) is the third-generation platform from the supercomputer company Cerebras and is designed to run AI systems such as OpenAI's GPT-4 and Anthropic's Claude 3 Opus. The chip contains 900,000 AI cores on a single silicon wafer measuring 21.5 x 21.5 cm, the same format as its 2021 predecessor, the WSE-2.

The new chip draws the same amount of power as the WSE-2 but delivers twice the performance. The previous chip had 2.6 trillion transistors and 850,000 AI cores, so the transistor count has risen by about 54% between generations. That is short of the doubling every two years described by Moore's law. For context, one of the most powerful chips currently used to train AI models is the Nvidia H200 graphics processing unit (GPU). It contains 80 billion transistors, about 50 times fewer than Cerebras's chip.
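The transistor and core comparisons come down to simple division. The short Python sketch below checks them using only the figures quoted in this article, including the 80 billion transistors given for the H200. The constant names and the `ratio` helper are illustrative and are not taken from any Cerebras or Nvidia source.

```python
# Figures as quoted in the article (not independently verified specs).
WSE3_TRANSISTORS = 4_000_000_000_000   # 4 trillion
WSE2_TRANSISTORS = 2_600_000_000_000   # 2.6 trillion
H200_TRANSISTORS = 80_000_000_000      # 80 billion, as quoted for Nvidia's H200
WSE3_CORES = 900_000
WSE2_CORES = 850_000


def ratio(a: int, b: int) -> float:
    """Return how many times larger a is than b (hypothetical helper)."""
    return a / b


# How many times more transistors the WSE-3 has than the H200.
wse3_vs_h200 = ratio(WSE3_TRANSISTORS, H200_TRANSISTORS)

# Generation-over-generation growth from WSE-2 to WSE-3.
gen_growth = ratio(WSE3_TRANSISTORS, WSE2_TRANSISTORS)
core_growth = ratio(WSE3_CORES, WSE2_CORES)

print(f"WSE-3 vs H200 transistors: {wse3_vs_h200:.0f}x")
print(f"WSE-2 -> WSE-3 transistor growth: {gen_growth:.2f}x")
print(f"WSE-2 -> WSE-3 core growth: {core_growth:.2f}x")
```

Running it prints a 50x transistor ratio, 1.54x transistor growth and 1.06x core growth. Most of the generational gain therefore comes from denser cores rather than from many more of them.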

The WSE-3 will be used in the Condor Galaxy 3 supercomputer in Dallas, Texas, according to a statement Cerebras released on March 13. Condor Galaxy 3 is currently under construction. It will consist of 64 building blocks, each a Cerebras CS-3 AI system powered by a WSE-3 chip. Once interconnected and running, the full system will have a computational capacity of up to 8 exaFLOPS. Combined with the Condor Galaxy 1 and Condor Galaxy 2 systems, the network will reach a total capacity of 16 exaFLOPS. FLOPS (floating-point operations per second) is a unit of measure for the computational power of computer systems. One exaFLOPS equals 1,000 petaFLOPS, or one quintillion calculations per second.
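The exaFLOPS figures can be sanity-checked with a few lines of arithmetic. The Python sketch below confirms the unit conversion and derives two implied values from the quoted totals: the average capacity per CS-3 block and the combined capacity of Condor Galaxy 1 and 2. Both derived values are assumptions for illustration, not published specifications. The per-block figure assumes the 8 exaFLOPS total is split evenly across the 64 blocks.

```python
PETA = 10**15
EXA = 10**18

# Totals as quoted in the article, in exaFLOPS.
CG3_EXAFLOPS = 8
CG_NETWORK_EXAFLOPS = 16
CG3_BLOCKS = 64  # CS-3 systems, each built around one WSE-3

# Unit check: 1 exaFLOPS = 1,000 petaFLOPS = 10**18 operations per second.
assert EXA == 1_000 * PETA

# Implied average per CS-3 block, assuming an even split (derived, not a spec).
# Integer division is exact here because 8 * 10**18 divides evenly by 64.
per_block_petaflops = CG3_EXAFLOPS * EXA // CG3_BLOCKS // PETA

# Implied combined capacity of Condor Galaxy 1 and 2 (derived, not a spec).
cg1_cg2_exaflops = CG_NETWORK_EXAFLOPS - CG3_EXAFLOPS

print(f"Per CS-3 block: {per_block_petaflops} petaFLOPS")
print(f"Condor Galaxy 1 + 2 combined: {cg1_cg2_exaflops} exaFLOPS")
```

Under these assumptions, each CS-3 block contributes about 125 petaFLOPS, and the two earlier Condor Galaxy systems together account for the remaining 8 exaFLOPS of the network.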

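Currently, the world's most powerful supercomputer is Frontier at Oak Ridge National Laboratory, which delivers about 1 exaFLOPS. Frontier's figure comes from the double-precision LINPACK benchmark used by the TOP500 list, whereas AI-focused systems typically quote lower-precision throughput, so the two numbers are not directly comparable. Condor Galaxy 3 will be used to train future AI systems up to ten times larger than GPT-4 or Google's Gemini.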