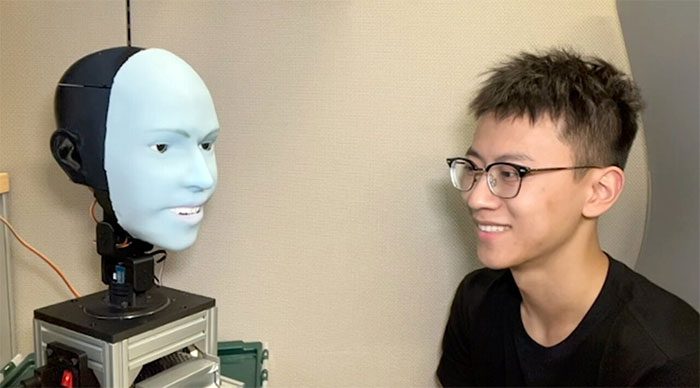

Emo Robot Can Predict a Smile About 840 Milliseconds Before a Person Smiles, Then Smile at the Same Time

Emo can anticipate a person's expression and smile at the same time they do. (Video: New Scientist)

Humans are gradually becoming accustomed to robots that can communicate fluently through speech, thanks in part to advances in large language models such as those behind ChatGPT. However, robots' non-verbal communication skills, particularly facial expressions, still lag behind. Designing a robot that can not only display a wide range of expressions but also display them at the right moment is extremely challenging.

The Creative Machines Laboratory at Columbia University's School of Engineering in the USA has been working on this problem for more than five years. In a new study published in the journal Science Robotics, the lab's researchers introduced Emo, an AI-driven robot that can anticipate human facial expressions and reproduce them at the same moment. According to a March 27 report by TechXplore, Emo can predict a smile about 840 milliseconds before the person smiles and then smile at the same time.

The Emo robot marks a significant advance in non-verbal communication between humans and robots. (Image: popsci)

Emo can also express the six basic emotions (anger, disgust, fear, happiness, sadness, and surprise), as well as a range of more nuanced reactions. This is made possible by artificial muscles built from cables and motors: Emo forms each expression by pulling on specific points of its face.

The research team also used artificial intelligence (AI) software to predict human facial expressions and generate matching robotic ones: “Emo addresses these challenges with 26 motors, soft skin, and eyes equipped with cameras. As a result, it can engage in non-verbal communication such as eye contact and facial expressions. Emo is equipped with several AI models, including ones for detecting human faces, controlling the facial actuators to mimic expressions, and even predicting human facial expressions. This allows Emo to interact in a timely and authentic manner.”

The research team developed two AI models. The first predicts human facial expressions by analyzing subtle changes in the face of the person opposite the robot, while the second generates the motor commands needed to produce the corresponding expression on Emo's own face.

To teach the robot to express emotions, the research team placed Emo in front of a camera and let it make random facial movements. After several hours, the robot had learned the relationship between its facial expressions and the motor commands that produce them, much as humans practice expressions in front of a mirror. The team calls this “self-modeling,” akin to a person imagining what they look like when making a certain expression.
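To make the self-modeling idea concrete, here is a toy Python sketch, not the team's code. A simulated face with two hypothetical motors stands in for Emo's 26; the robot "babbles" random motor commands while watching the result, and the recorded pairs then serve as a simple nearest-neighbor inverse model that picks the command best matching a target expression. The `simulate_face` function, the feature names, and all numbers are assumptions for illustration; Emo's actual models are described in the Science Robotics study.

```python
import math
import random

# Hypothetical stand-in for the robot's face as seen in the "mirror" camera.
# Maps two motor commands (each 0..1) to two landmark features. The real Emo
# has 26 motors; this toy mixing is an assumption for illustration only.
def simulate_face(command):
    m0, m1 = command
    mouth_lift = 0.8 * m0 + 0.2 * m1
    brow_raise = 0.1 * m0 + 0.9 * m1
    return (mouth_lift, brow_raise)

def babble(n_samples, seed=0):
    """Issue random motor commands and record the face each one produces."""
    rng = random.Random(seed)
    memory = []
    for _ in range(n_samples):
        command = (rng.random(), rng.random())
        memory.append((command, simulate_face(command)))
    return memory

def inverse_model(memory, target_face):
    """Return the remembered command whose observed face is closest to target."""
    best_command, _ = min(memory, key=lambda pair: math.dist(pair[1], target_face))
    return best_command

memory = babble(5000)
smile = (0.7, 0.2)  # hypothetical target: mouth corners lifted, brows mostly relaxed
command = inverse_model(memory, smile)
print(command, simulate_face(command))
```

A nearest-neighbor lookup is used here only because it is the simplest inverse model that can be built purely from self-observation; a practical system would generalize between recorded samples rather than just recalling the closest one.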

Next, the team played videos of human facial expressions for Emo to observe frame by frame. After several hours of training, Emo could predict an expression by picking up subtle changes in a person's face as they begin to smile.
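The idea of predicting a smile from its earliest movements can be illustrated with a deliberately simple stand-in. The Python sketch below is an assumption-laden toy, not Emo's predictor: it tracks one hypothetical facial feature per frame (mouth-corner lift, normalized so 1.0 is a full smile), fits a least-squares line to the last few frames, and extrapolates to estimate how long until the feature crosses a chosen smile threshold. The frame rate, threshold, and window size are illustrative values.

```python
# Hypothetical settings; none of these values come from the study.
FPS = 30                 # assumed camera frame rate
SMILE_THRESHOLD = 0.6    # assumed feature level that counts as a smile
WINDOW = 5               # number of recent frames used to estimate the trend

def predict_smile_onset(features, fps=FPS, threshold=SMILE_THRESHOLD, window=WINDOW):
    """Estimate seconds until the feature crosses threshold, or None if not trending up."""
    if len(features) < window:
        return None
    recent = features[-window:]
    xs = list(range(window))
    mean_x = sum(xs) / window
    mean_y = sum(recent) / window
    # Least-squares slope of the feature against frame index over the window.
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, recent)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    current = recent[-1]
    if current >= threshold:
        return 0.0  # already smiling
    if slope <= 0:
        return None  # flat or falling: no smile on the way
    frames_left = (threshold - current) / slope
    return frames_left / fps

# A face that has just started to move: the lift rises 0.02 per frame.
frames = [0.10, 0.10, 0.11, 0.12, 0.14, 0.16, 0.18, 0.20]
eta = predict_smile_onset(frames)
print(f"smile expected in about {eta:.2f} s")
```

An estimate like this gives a robot lead time: knowing roughly when a smile will arrive, it could start moving its own face early so that both expressions appear together. Linear extrapolation is used here only for transparency; it would misfire on noisy or non-linear facial motion.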

“I believe accurately predicting human facial expressions is a revolution in the field of human-robot interaction. Previously, robots were not designed to take human expressions into account during interaction. Now, robots can integrate facial expressions into their responses,” said Yuhang Hu, a Ph.D. student at the Creative Machines Laboratory and a member of the research team.

“Having robots produce expressions in real time, simultaneously with humans, not only improves the quality of the interaction but also helps build trust between humans and robots. In the future, when you interact with a robot, it will observe and interpret your facial expressions, just like a real person,” Hu added.

The team plans to add verbal communication to Emo next. “Our next step involves integrating verbal communication capabilities. This will allow Emo to engage in more complex and natural conversations,” Hu noted.