Thanks to new error correction techniques, Google is getting closer to a commercially viable quantum computer.

Quantum states, the inspiration for scenes in “Ant-Man 3,” are also the mechanism behind the qubits of a quantum computer. (Image: Marvel Studios).

Months before production of Ant-Man and the Wasp (2018) began, director Peyton Reed and the production team gathered at Marvel Studios in Burbank, California, to hear a quantum physicist explain the real scientific phenomena that inspired the film.

“The quantum realm is a place of infinite possibilities, another universe where the laws of physics and natural forces as we know them have not crystallized,” Spyridon Michalakis, a quantum physicist at the Institute for Quantum Information and Matter at Caltech, told the New York Times, recounting what he had explained to the film crew. These infinite possibilities are also the operating principle of quantum bits, or qubits, which in quantum computing can exist in a superposition of 0 and 1.

At a laboratory in Santa Barbara, California, physicists at Google demonstrated that they could reduce the error rate of quantum computations by increasing the number of qubits. This milestone follows the famous 2019 experiment in which a Google quantum computer performed a calculation that would take a conventional computer thousands of years.

However, Google also acknowledges that the improvement is still modest, and the error rate needs to be reduced significantly further. “The error rate has decreased a bit; we need it to decrease a lot more,” said Hartmut Neven, who oversees the quantum computing division at Google’s headquarters in Mountain View, California.

Quantum Error Correction

Error correction is essential if quantum computers are to solve problems beyond the capabilities of classical computers, such as factoring large integers into primes or modeling the behavior of chemical catalysts in detail, Google physicists explained in Nature.

All computers make errors. A conventional computer chip stores information in bits, each representing either 0 or 1, and duplicates some of that information into backup bits. When an error occurs, the chip uses the backup bits to detect and correct it automatically.
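
The backup-bit idea can be illustrated with a repetition code, the simplest classical error-correcting scheme. This is a minimal sketch chosen for illustration only: real chips typically use more efficient codes, and the function names here are hypothetical.

```python
def encode(bit: int, copies: int = 3) -> list[int]:
    """Store one logical bit as several identical copies (use an odd count)."""
    return [bit] * copies


def decode(stored: list[int]) -> int:
    """Recover the logical bit by majority vote over the stored copies."""
    return 1 if sum(stored) * 2 > len(stored) else 0


stored = encode(1)          # three copies of the bit 1
stored[0] ^= 1              # a single copy is corrupted
recovered = decode(stored)  # the two intact copies outvote the bad one
print(recovered)
```

With three copies, any single flipped bit is outvoted by the two intact copies; two simultaneous flips would defeat the vote.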

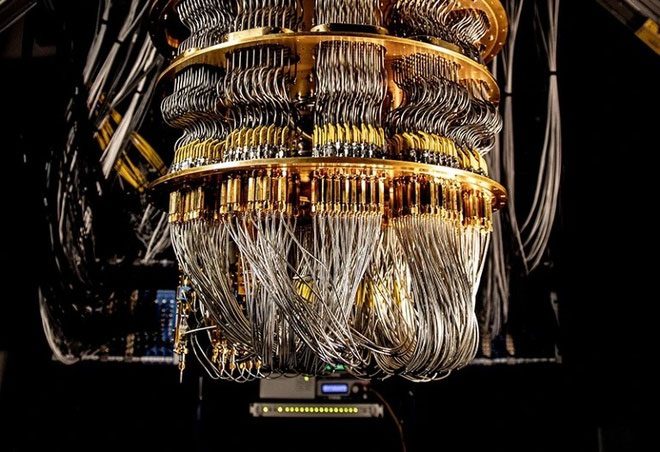

The Sycamore quantum processor introduced by Google in 2019 is considered the first milestone in the development of quantum computers. (Image: Google).

“In quantum information, this cannot be done. Quantum computers operate based on quantum states called qubits, which can exist in a mixture of 0 and 1. A qubit cannot be read without losing its quantum state, thus you cannot copy information from one qubit to a backup qubit,” said Julian Kelly, Director of Quantum Hardware at Google.

Theorists have proposed quantum error correction techniques to address this issue. These techniques encode one qubit of information in a set of several physical qubits rather than in a single qubit. The computer can check some of the physical qubits in the set to assess the integrity of the informational qubit and correct any errors it finds. In principle, the more physical qubits there are, the more effectively the quantum computer can correct errors.
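
The checking step can be sketched with a classical toy model of the three-qubit bit-flip code. This is an illustrative assumption, not the scheme Google used: it tracks only bit-flip errors, ignores superposition and phase errors, and all names are hypothetical. What it shows is that parity checks between neighboring qubits locate an error without reading the encoded value itself.

```python
# Maps each pair of parity-check results (the "syndrome") to the index
# of the flipped qubit, or None when no error is detected.
SYNDROME_TO_FLIP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def measure_syndrome(q: list[int]) -> tuple[int, int]:
    """Parities of qubits (0, 1) and (1, 2); reveals errors, not the data."""
    return (q[0] ^ q[1], q[1] ^ q[2])


def correct(q: list[int]) -> list[int]:
    """Flip back the single qubit the syndrome points to, if any."""
    fixed = list(q)
    flip = SYNDROME_TO_FLIP[measure_syndrome(fixed)]
    if flip is not None:
        fixed[flip] ^= 1
    return fixed


# Every single bit-flip on either encoded value is repaired.
for logical in (0, 1):
    for error_position in range(3):
        block = [logical] * 3
        block[error_position] ^= 1
        assert correct(block) == [logical] * 3
```

Note that the same error produces the same syndrome whether the block encodes 0 or 1, so the checks carry no information about the stored value.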

Google’s Progress

However, adding more physical qubits also increases the likelihood of multiple simultaneous errors. Google tested the practical efficacy of this error correction technique by implementing two versions.

The first version uses a set of 17 qubits and can recover from one error at a time. The second, larger version uses a set of 49 qubits and can recover from two simultaneous errors. The experiments showed that the larger version corrected errors better than the smaller one.
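
The qubit counts and error tolerances quoted above are consistent with a surface code of distance d, assuming a layout of d*d data qubits plus d*d - 1 measurement qubits (an assumption; the article does not state the layout). The sketch below reproduces those numbers and adds a deliberately crude toy model, in which every qubit fails independently with probability p, to illustrate why a larger code helps only when the physical error rate is low enough. The function names and the values of p are hypothetical.

```python
from math import comb


def physical_qubits(d: int) -> int:
    """Assumed layout: d*d data qubits plus (d*d - 1) measurement qubits."""
    return 2 * d * d - 1


def correctable_errors(d: int) -> int:
    """A distance-d code can recover from up to (d - 1) // 2 errors."""
    return (d - 1) // 2


def prob_too_many_errors(n: int, t: int, p: float) -> float:
    """Toy model: chance that more than t of n qubits fail, each with prob. p."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(t + 1, n + 1))


for d in (3, 5):
    n, t = physical_qubits(d), correctable_errors(d)
    print(f"d={d}: {n} qubits, corrects {t} error(s)")
    for p in (0.001, 0.05):
        print(f"  p={p}: P(uncorrectable) = {prob_too_many_errors(n, t, p):.2e}")
```

In this toy model the 49-qubit code fails less often than the 17-qubit code at p = 0.001 but more often at p = 0.05, which mirrors the tension between added protection and added opportunities for error. Noise in a real device is far more complex than this independent-failure assumption.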

Google regards the error correction technique announced on February 22 as the second of six milestones toward a one-million-qubit quantum computer. (Image: Google Quantum AI).

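“However, the current level of improvement is very small, and it is uncertain whether using even more qubits will yield better error correction performance,” noted Barbara Terhal, a quantum error correction specialist at Delft University of Technology in the Netherlands.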

Google has established a quantum computing roadmap with six key milestones. It considers the 2019 experiment the first milestone and the new experiment the second.

The sixth milestone is a machine composed of one million physical qubits, encoding 1,000 informational qubits. “At that stage, we can confidently promise the commercial value of quantum computers,” Neven stated.