A New Study Confirms That the Brain Can Store Ten Times More Information Than Previously Thought

Similar to computers, the memory of the brain is measured in “bits.” This storage capacity depends on the connections between neurons, known as synapses.

Previously, scientists believed that the number and size of synapses were quite limited, resulting in a relatively small storage capacity for the brain. However, recent studies have revealed that the brain can contain up to ten times the amount of information we once thought.

In the new study, researchers developed a precise method to assess the connections between neurons in a specific part of the mouse brain. These connections, the synapses, serve as the foundation for learning and memory: brain cells transmit information across these junctions to store and share data.

Understanding the strengthening and weakening of synapses allows scientists to more accurately quantify the amount of information that these connections can store. The results of this research could enhance learning capabilities and provide deeper insights into aging and diseases that impair connections in the brain.

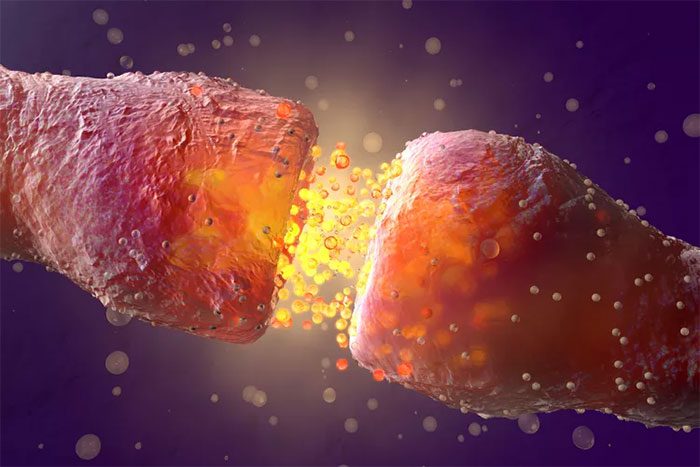

The human brain has over 100 trillion synapses between neurons. Neurotransmitters released through these synapses facilitate information transmission within the brain.

During learning, the transmission of information through synapses increases, aiding in the storage of new information. Overall, the strengthening or weakening of synapses is a response to the activity level of neurons. The more we learn or engage in activities, the more synaptic strengthening is stimulated.

Synapses promote information exchange between neurons. (Image: Westend61/Getty Images)

However, as we age or develop neurological diseases like Alzheimer’s, synapses become less active and weaken, diminishing cognitive performance and impairing the ability to store and retrieve memories.

Scientists can measure the strength of synapses through their physical characteristics, but this was not an easy task in the past. The new study has changed that.

The research team used information theory to measure the strength and plasticity of synapses, that is, their ability to transmit and store information. This method allows them to quantify the information transmitted through synapses, measured in bits. One bit corresponds to a synapse that can transmit information at 2 distinguishable levels, and 2 bits allow for 4 levels…

The research team analyzed pairs of synapses in the hippocampus of the mouse brain, a region crucial for learning and memory formation. The analysis showed that synapses in the hippocampus can each store between 4.1 and 4.6 bits of information.
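To make the bit figures concrete, here is a minimal Python sketch of the usual information-theory relationship between bits and distinguishable levels. It assumes that the "degrees" mentioned above are the number of distinguishable states a synapse can take, so that levels = 2^bits. The function names are hypothetical and chosen for illustration; this is not the researchers' analysis method.

```python
import math


def distinguishable_levels(bits: float) -> float:
    """Number of distinguishable states that `bits` of information can encode.

    Assumption: the standard relationship levels = 2 ** bits.
    """
    return 2.0 ** bits


def bits_for_levels(levels: float) -> float:
    """Inverse relationship: bits needed to tell `levels` states apart."""
    return math.log2(levels)


if __name__ == "__main__":
    # 1 and 2 bits are the article's examples; 4.1 and 4.6 are the
    # reported range for the hippocampal synapses in the study.
    for bits in (1, 2, 4.1, 4.6):
        levels = distinguishable_levels(bits)
        print(f"{bits} bits -> about {levels:.1f} distinguishable levels")
```

Under this assumption, the reported range of 4.1 to 4.6 bits would correspond to somewhere between 17 and 25 distinguishable levels per synapse, compared with only 2 levels for a synapse that stores a single bit.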

A previous study reached a similar conclusion, but at that time, the data was processed using a less accurate method. This new research confirms what many neuroscientists believe: each synapse has the capacity to store more than 1 bit.

It is important to note that these findings are based on experiments conducted in a small region of the mouse hippocampus, and thus results cannot yet be generalized to the entire mouse brain or to the human brain.

In the future, the research team's method could be used to compare storage capacity across different regions of the brain, and between healthy individuals and those with disease. It also opens up the possibility of determining the information storage capacity of the brains of various animal species.