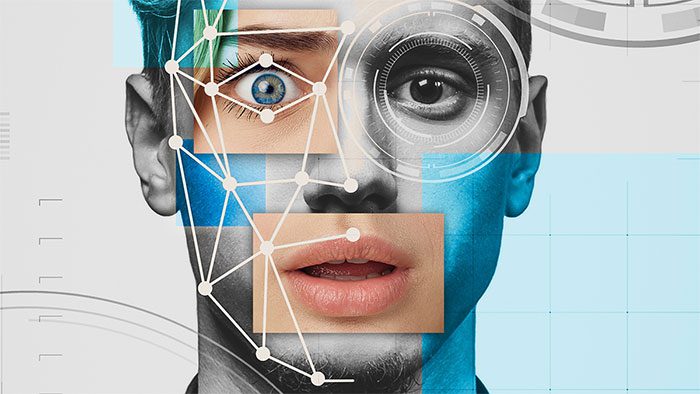

Deepfake images and videos are becoming a dangerously popular trend, appearing more and more often online and in the media. Given how quickly this phenomenon is spreading, is there a way to detect it and mitigate its harmful effects?

This is a summary of an article by Gavin Phillips on MakeUseOf about the risks of deepfake technology and how to detect deepfakes.

Videos and images are part of our daily lives, appearing everywhere from TVs, computers, and smartphones to online platforms like YouTube and Facebook. However, a technology known as deepfake has emerged that can “distort the truth”, eroding people’s trust in the videos and images they see. What should you do if you or a loved one becomes the victim of a deepfake?

Today, the Internet has brought humanity closer together, letting you find information, images, and videos of almost anyone with just a few clicks on social media. But alongside its remarkable capabilities, the Internet also carries numerous security risks. Hacks that steal users’ personal data have become all too familiar; in one recent incident, credentials for more than half a million Zoom accounts were leaked on the dark web.

In particular, the rise of deepfakes in recent years has made the problems surrounding online images and videos more complicated than ever. The situation is all the more serious because the technology is no longer difficult to use and is becoming increasingly affordable.

The rise of deepfake complicates issues related to online images and videos.

What is Deepfake?

Deepfake is a technology that uses AI, typically built with open-source tools, to alter or swap the faces and lip movements of people in videos and images.

When deepfakes are used for harmless purposes, such as playing pranks on friends or family, they are benign. Here, however, we are concerned with the worst cases, in which deepfakes are misused as a tool for malicious acts such as pornographic videos of celebrities, revenge porn, and fake news.

The term is a blend of “deep learning” and “fake”, because both elements are central to how the technology works. “Fake” plainly signals that the goal is to produce counterfeit images that differ from the original. “Deep learning” is more complex: it refers to the process by which the artificial intelligence “learns”.

The term Deepfake is a combination of “deep learning” and “fake”.

Broadly, building any deep-learning system involves two main steps: collecting input data, then choosing a model and a training algorithm that repeatedly processes and learns from that data. Deepfake is no exception. Its input data consists of videos and images of the people involved, and open-source deep-learning frameworks such as TensorFlow and Keras are freely available online. After a period of training, the AI behind a deepfake can swap faces with a high degree of realism.
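The face-swapping idea used by the original open-source deepfake tools can be sketched in miniature: one encoder is shared by both people, each person gets their own decoder, and the “swap” comes from encoding a face of person A and decoding it with person B’s decoder. The toy below is a minimal sketch under loud assumptions: tiny 4-number vectors stand in for face images, single linear layers stand in for the deep convolutional networks real tools train, and the names `FaceSwapToy`, `train`, and `total_loss` and all data are hypothetical, chosen only for illustration.

```python
import random

def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

class FaceSwapToy:
    """Hypothetical toy: shared linear encoder plus one linear decoder per identity."""

    def __init__(self, dim, latent, identities=("A", "B"), seed=0):
        rng = random.Random(seed)
        self.enc = rand_matrix(latent, dim, rng)  # shared by every identity
        self.dec = {name: rand_matrix(dim, latent, rng) for name in identities}

    def reconstruct(self, x, identity):
        return matvec(self.dec[identity], matvec(self.enc, x))

    def loss(self, x, identity):
        """Squared reconstruction error for one face."""
        return sum((r - xi) ** 2 for r, xi in zip(self.reconstruct(x, identity), x))

    def step(self, x, identity, lr):
        """One gradient-descent step on the squared error of a single face."""
        dec = self.dec[identity]
        z = matvec(self.enc, x)
        err = [r - xi for r, xi in zip(matvec(dec, z), x)]
        # Backpropagate the residual into latent space before the decoder changes.
        back = [sum(dec[i][k] * err[i] for i in range(len(err))) for k in range(len(z))]
        for i in range(len(dec)):
            for k in range(len(z)):
                dec[i][k] -= lr * 2 * err[i] * z[k]
        for k in range(len(self.enc)):
            for j in range(len(x)):
                self.enc[k][j] -= lr * 2 * back[k] * x[j]

    def swap(self, face, target):
        """Encode any face with the shared encoder, then render it as `target`."""
        return self.reconstruct(face, target)

def train(model, faces, epochs=2000, lr=0.02):
    """Each person's faces train the shared encoder and only their own decoder."""
    for _ in range(epochs):
        for identity, samples in faces.items():
            for x in samples:
                model.step(x, identity, lr)

def total_loss(model, faces):
    return sum(model.loss(x, name) for name, xs in faces.items() for x in xs)

# Hypothetical "faces": person A's features sit mostly in the first two slots, B's in the last two.
faces = {
    "A": [[1.0, 0.8, 0.2, 0.1], [0.9, 1.0, 0.1, 0.2]],
    "B": [[0.1, 0.2, 1.0, 0.9], [0.2, 0.1, 0.8, 1.0]],
}
model = FaceSwapToy(dim=4, latent=2)
before = total_loss(model, faces)
train(model, faces)
after = total_loss(model, faces)
swapped = model.swap(faces["A"][0], target="B")  # A's face pushed through B's decoder
print(f"loss before={before:.3f} after={after:.3f}")
```

Sharing the encoder forces it to capture what both people’s faces have in common (in a real system, ideally pose, expression, and lighting), while each decoder learns to draw one specific face; that split is what makes the swap possible. Real tools apply the same structure with far larger networks and datasets, which is why training takes hours on a graphics card rather than milliseconds.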

The Dangers of Deepfake

Every day, we encounter countless images and videos online, especially on social media. I believe that most of these photos and videos have been edited: platforms like Facebook and Instagram now serve as a reflection of who we are, so almost everyone wants their posts to look appealing. Thanks to the wide availability of editing tools, from basic smartphone apps to advanced software such as Photoshop, users can easily adjust images and videos to their liking, and some edits are so realistic that they are impossible to spot.

Deepfake, however, operates on an entirely different level; it is no exaggeration to call it a master of counterfeit images and videos.

One of the deepfake videos that most shocked viewers worldwide is a fabricated clip of former President Obama. The figure in front of the camera looks just like Obama, but the words, and the voice delivering them, belong to the famous American actor and director Jordan Peele, which becomes clear at the end of the video.

The deepfake video of Obama’s image and voice.

Although this video is harmless, it raises fears about where deepfake technology and AI could lead. The barriers to using deepfakes were initially quite high, but it is no longer an advanced science now that data and algorithms are readily available online. Nor does training require a supercomputer: a regular PC with a standard graphics card can complete the process in just a few hours.

Pornographic Films Featuring Celebrity Faces

Malicious individuals have exploited deepfake for personal gain, producing numerous pornographic videos in which the performers’ faces are replaced with those of celebrities such as Emma Watson, Taylor Swift, and Scarlett Johansson. Each one is an existing adult film with only the faces changed, and once uploaded, these videos have attracted tens of millions of views on adult websites. Yet none of these sites has acted to remove them or restore the original videos.

Marvel star Scarlett Johansson is one of the victims of deepfakes.

Giorgio Patrini, CEO and Research Director at Sensity, a company specializing in deepfake analysis and detection, stated: “Celebrity pornographic videos will continue to exist unless there is a legitimate reason or legal obligation for the hosting sites. Consequently, these videos continue to be uploaded without facing any barriers.”

Harming Women through Pornographic Films and Images

Ordinary women, not just celebrities, have also become victims of deepfake pornography. A study by Sensity uncovered a deepfake bot operating on the Telegram messaging app that had generated more than 100,000 fake nude images. Most of the original photos had been taken from social networks.

The bot is a perfect complement to deepfake technology, letting people with no knowledge of deepfakes use it easily: they simply upload an image, and the bot handles the rest automatically. Studies show that the bot appears to work only on images of women, and that users can subscribe to premium packages that process more images and sharpen blurry ones.

The bot is a perfect addition to deepfake technology.

The use of deepfake bots has sparked a strong backlash, with people condemning the bot’s use of stolen images as exploitative, abusive, and inhumane. What happens to these women’s lives when such images spread online? How will they face their family, friends, and colleagues? Perpetrators could also use the leaked images to blackmail and extort victims’ families, or worse, the victims could end up featured in pornographic videos.

One question remains unanswered: how could a bot that performs simple, repetitive tasks get past Telegram’s robust safeguards? This was considered almost impossible, since the messaging service is known for its strong security and its ability to keep pornographic bots off the platform.

“Playing Tricks” on the World

Since the deepfake video of Obama was uploaded, concern has grown worldwide that similar videos could be made with far more harmful intent. The United States is one of the world’s leading powers, so the words of its president carry enormous weight. If criminals used a deepfake of Obama to spread messages of war or rebellion, the result could be a tragedy, and the situation could escalate even further from there.

The 2018 deepfake video of Obama made many people fear deepfake technology.

Imagine a deepfake video in which the head of a major corporation or bank announces that the stock market has collapsed. Thanks to the internet, the news would spread around the world almost instantly, prompting many people to rush to sell their stocks. Once such a video is publicly posted, stopping or removing it quickly would be extremely difficult. This is just one of many dire scenarios that deepfake technology makes possible.

Moreover, as deepfake technology becomes cheaper and easier to use, the number of fake videos and images grows dramatically. The messages they carry become not only more complex but also more unpredictable, with videos of the same person or on the same topic produced in different tones, settings, and styles.

Distorting the Truth

Looking at deepfake videos and images shows how convincingly this technology can fabricate content. Even when told that a video or image is a deepfake, many viewers doubt it and refuse to believe it was altered. To be fair, without a label saying “this is a deepfake”, I think most of us would take such content at face value, simply because deepfake images and videos can look incredibly realistic.

The ability of deepfake to “distort the truth” has both negative and positive implications. Alongside the harm it causes, deepfake technology could be beneficial in some situations if used correctly. For instance, suppose a criminal is caught and a video clearly records their actions, but they claim, “this is fake evidence; the video was made with deepfake.” In that case, we could create deepfake videos to generate additional evidence that they cannot deny.

The ability to fabricate with deepfake is extremely sophisticated.

Leadership and Ideological Manipulation

There have been several documented cases of deepfakes being used to create misleading content and to steer public opinion by impersonating high-ranking officials. According to information uncovered through profiles on LinkedIn and Twitter, such content has been used in connection with strategic government organizations, featuring high-ranking figures who do not exist but were created with deepfake technology.

Credible sources indicate that this kind of impersonation is indeed used within government networks and national intelligence agencies to gather information and carry out covert political activities without revealing identities. Even so, the risk of deepfakes infiltrating and compromising such material is unpredictable, and it must be prevented from the outset.

The risk of deepfake infiltrating and compromising videos remains unpredictable.

The Erosion of Trust Among People

Communication is essential to society and happens constantly, every day. People build relationships on trust and on the assumption that others are speaking honestly.

Bad actors can exploit this vulnerability with deepfake technology. By impersonating someone’s voice, they can abuse the trust of that person’s family, friends, and colleagues to commit numerous malicious acts, such as stealing personal images and videos, sexual harassment, and breaking up families. Such inhumane acts have already occurred and have driven many victims to take their own lives.

How to Detect and Prevent Deepfake

As deepfake videos and images grow more sophisticated and polished, they are becoming very difficult to identify. In the early days of the technology there were telltale weaknesses such as blurry images, voice errors, and glitches, but deepfakes have since moved beyond that.

Although there is no single method that reliably detects deepfakes, I believe that by paying attention to the following four indicators, you may still be able to recognize deepfake videos and images.

- Small Details: Despite significant advances, deepfake editing is still not perfect, particularly when it comes to small details in videos such as hair movement, eye movement, cheek structure, and facial expressions while speaking. None of these has yet reached a fully refined, natural look, especially eye movement. Although deepfake tools have tried to address this since the beginning, it remains a significant gap in the technology.

Microsoft Video Authenticator is a deepfake detection tool developed by Microsoft.

- Emotion: Another weakness of deepfake videos is the emotion shown on people’s faces. The technology can only partly simulate expressions such as anger and happiness, and the results still have a machine-like “stiffness”. Experts say deepfakes have a long way to go before they look as emotionally natural as an ordinary person.

- Video Quality: Today, people routinely record and watch video in 4K resolution, often with 10-bit HDR. When a political leader makes a statement by video or live stream, it is almost always filmed in a room with professional equipment. So if such a statement suffers from transmission errors, poor audio, or low video quality, that is a warning sign that deepfake technology may have been involved.

- Source of Publication: The final factor to consider is where the video comes from. Although popular social media platforms verify user identities to prevent impersonation and data theft, these systems can still fail. So do not assume a video is genuine just because a platform let it spread; harmful content that slips past these safeguards may well be a deepfake.
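The eye-movement indicator can be made a little more concrete by measuring blinking. Early research found that some deepfakes blinked far less often than real people, although newer generators have reportedly closed much of this gap, so the sketch below is one weak signal, not a detector. It assumes you already have six (x, y) landmarks per eye for every frame from a face-landmark detector (for example, the 68-point layout used by dlib), and it uses the eye aspect ratio (EAR) described by Soukupová and Čech: the eye’s height divided by its width, which drops sharply when the eye closes. The 0.2 threshold, the two-frame minimum, and the function names are illustrative assumptions, not tuned values.

```python
import math

def eye_aspect_ratio(eye):
    """EAR for six (x, y) eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid, and
    p6, p5 on the lower lid directly below them.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:  # the clip ended mid-blink
        blinks += 1
    return blinks

def blinks_per_minute(ear_series, fps, **kwargs):
    """Blink rate over the whole clip; `fps` is the video's frame rate."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes

# Hypothetical landmarks for a wide-open eye: height 2 at both lid pairs, width 3.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(round(eye_aspect_ratio(open_eye), 3))  # 0.667
```

People at rest commonly blink somewhere around 15 to 20 times a minute, so a long clip of someone talking with almost no blinks deserves a closer look; a normal blink rate, on the other hand, proves nothing about authenticity.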

Tools for Detecting and Preventing Deepfake

Many large technology companies have now joined the fight against deepfakes, developing a range of detection tools and platforms aimed at blocking deepfake content.

A notable example is Microsoft Video Authenticator, a deepfake detection tool developed by Microsoft that analyzes a photo or video within seconds and estimates how likely it is to have been manipulated. Social media platforms have also introduced features to block images and videos altered with deepfakes. Google, meanwhile, is experimenting with an application to combat audio impersonation.