CEO Tim Cook Acknowledges Apple Intelligence Can’t Completely Avoid “Hallucinations,” the Phenomenon of Generating False or Nonexistent Information.

Announced at WWDC 2024, Apple Intelligence is a suite of artificial intelligence (AI) features for iPhones, iPads, and Mac computers that can generate content and images and summarize text.

As with other AI systems, many have raised concerns about hallucinations in Apple Intelligence. Statements from CEO Tim Cook indicate that Apple’s AI has not yet completely eliminated the problem.

CEO Tim Cook during an interview right after the WWDC 2024 event. (Photo: Marques Brownlee/YouTube).

In an interview with The Washington Post, Cook admitted he had “never claimed” that Apple Intelligence does not produce misleading or false information.

“I think we have done everything necessary, including carefully considering the readiness to apply technology across various fields. Therefore, I am confident that (Apple Intelligence) will have very high quality. But I must be honest that the accuracy may not reach 100%. I have never claimed this,” Cook emphasized.

What is AI Hallucination?

According to IBM, AI hallucination is a phenomenon in which a large language model (LLM), often a chatbot or computer vision tool, perceives patterns or objects that do not exist or are imperceptible to humans, producing nonsensical or misleading output.

In other words, users expect an AI to produce accurate results grounded in its training data. In some cases, however, the output is not supported by that data, and the result is a “hallucination.”

ChatGPT is integrated with Siri starting with iOS 18. (Photo: The Verge).

Hallucinations are usually associated with human or animal brains rather than machines. The term is nevertheless applied to AI because it aptly describes how models produce these results, much as humans sometimes see strange objects in the sky, hear sounds with no clear source, or feel something touch their body.

Hallucinations arise from several factors, including overfitting, biased training data, and model complexity. AI products from Google, Microsoft, and Meta have all faced controversy over the phenomenon.

According to The New York Times, Google’s Bard chatbot once claimed that the James Webb Space Telescope was the first to capture images of an exoplanet. In reality, the first image of an exoplanet was captured in 2004, and the James Webb telescope did not launch until 2021.

Days later, Microsoft’s Bing chatbot reported incorrect information about singer Billie Eilish. After that, ChatGPT fabricated a series of nonexistent cases while helping a lawyer write a legal brief that was submitted to a court.

Why Does AI Hallucinate?

Chatbots like ChatGPT are built on LLMs trained on data from large repositories such as news sites, books, Wikipedia, and chat logs. Having analyzed the patterns in that data, the model produces output by predicting which words are most likely to come next, not by checking whether they are true.
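The idea of predicting words by probability can be illustrated with a toy example. The sketch below is not how ChatGPT or any production LLM works internally; it is a minimal bigram model over an invented corpus, and the names `CORPUS`, `train_bigrams`, `next_word_probs`, and `generate` are assumptions made up for this illustration. It only shows that choosing the next word by observed frequency involves no check on whether the resulting sentence is true.

```python
import random
from collections import Counter, defaultdict

# Toy corpus, invented for illustration; real LLMs train on vastly more text.
CORPUS = "the cat sat on the mat . the cat chased the mouse . the cat sat on the sofa ."


def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Turn the follower counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}


def generate(counts, start, length, rng):
    """Sample a continuation one word at a time, weighted by probability."""
    words = [start]
    for _ in range(length):
        probs = next_word_probs(counts, words[-1])
        if not probs:  # no known follower: stop early
            break
        choices, weights = zip(*probs.items())
        words.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(words)


counts = train_bigrams(CORPUS)
print(next_word_probs(counts, "cat"))
print(generate(counts, "the", 5, random.Random(0)))
```

Because each word is chosen only from what tended to follow the previous one, this toy model can string together a fluent sentence, such as one about a cat chasing a sofa, that never appeared in its corpus. That is a very rough analogue of a hallucination.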

“When you see the word ‘cat,’ you immediately think of experiences and things related to cats. For a language model, it’s just the string of characters ‘cat.’ Therefore, the model can still pull information about words and character strings that appear alongside,” explained Emily Bender, Director of the Computational Linguistics Lab at the University of Washington.

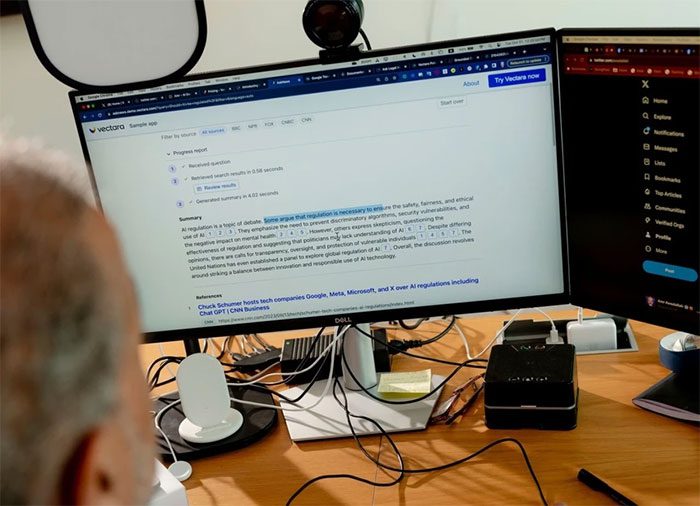

Because the Internet contains a great deal of misleading information, chatbots can easily repeat mistakes. In November 2023, Vectara, a startup founded by former Google employees, released research on how often AI chatbots fabricate information.

A summarization tool from Vectara. (Photo: The New York Times).

In the study, Vectara researcher Simon Hughes and his team asked the chatbots to summarize an article, a basic task that is easy to verify. Even on this task, the chatbots kept inventing information.

The results showed that even in settings designed to prevent it, chatbots fabricated information at least 3% of the time, and as much as 27% of the time.

For example, the research team asked Google’s PaLM to summarize an article about a man arrested for growing a “complex plant” in a warehouse. In its summary, the model asserted that the plants were cannabis, a detail that did not appear in the article.

“We provided 10-20 verified news items to the systems and asked for summaries. The fundamental issue is that they can still generate errors,” said Amr Awadallah, CEO of Vectara and former Vice President of Google Cloud.
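The percentages in the study can be read as a simple ratio: the number of summaries that contain unsupported information divided by the total number of summaries. The sketch below shows only that arithmetic. The function name `hallucination_rate`, the model names, and the labels are hypothetical and are not Vectara’s data or tooling; deciding whether a given summary contains fabricated details is the hard part, and it is assumed here to have been done already.

```python
def hallucination_rate(flags):
    """Share of summaries flagged as containing unsupported information.

    `flags` holds one boolean per summary: True if a reviewer judged that the
    summary added something not found in the source article.
    """
    flags = list(flags)
    if not flags:
        raise ValueError("need at least one labeled summary")
    return sum(flags) / len(flags)


# Hypothetical labels for 100 summaries per model (not Vectara's data).
labels_by_model = {
    "model_a": [True] * 3 + [False] * 97,
    "model_b": [True] * 27 + [False] * 73,
}

for name, flags in labels_by_model.items():
    print(f"{name}: {hallucination_rate(flags):.0%}")
```

A rate computed this way depends entirely on which articles are summarized and how strictly each summary is judged, so it describes performance on one task rather than a model’s behavior in general.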

Hallucination Rates of ChatGPT and Apple Intelligence

In a related article on Apple’s foundation models, the company said it tested for the generation of “harmful content, sensitive topics, and misinformation.” The violation rate for Apple Intelligence running on servers was 6.6% of all requests, which is relatively low compared with other models.

Because chatbots can respond to almost any request in an open-ended way, researchers at Vectara believe it is very difficult to determine hallucination rates precisely.

“You would have to check all the information in the world,” stated Dr. Simon Hughes, a researcher and project lead at Vectara.

Some features of Apple Intelligence. (Photo: Apple).

According to the research team, hallucination rates may be higher when chatbots perform tasks other than summarization. At the time the research was released, OpenAI’s technology had the lowest hallucination rate (around 3%), followed by Meta (around 5%), Anthropic’s Claude 2 (8%), and Google’s PaLM (27%).

“Making our systems helpful, honest, and harmless, which includes avoiding hallucinations, is one of our core goals as a company,” said Sally Aldous, a spokesperson for Anthropic.

With this research, Hughes and his colleagues want users to be more vigilant about information that comes from chatbots, in both consumer and business services.