Most advanced driver assistance systems (ADAS) today use a combination of radar and sonar (ultrasonic sensors) to warn about unseen threats and help stop vehicles before collisions occur. Lidar can perform similar functions, but it is a newer technology that may prove to be the best choice for the “vision” capabilities of autonomous vehicles (AVs).

As automakers and other companies shift toward real-world testing and driving, it is becoming clear what next-generation sensors such as Lidar can offer.

What is Lidar?

Lidar stands for ‘light detection and ranging’. Lidar systems use laser beams to build three-dimensional models of the environment. Light returns far faster than the sound used by sonar, and its much shorter wavelength resolves finer detail than the radio waves used by radar, so Lidar can map its surroundings quickly and precisely. Lidar originated in the 1960s, with early uses including tracking satellites and measuring distances in space, and it had been adopted in other fields by the mid-1990s, when the U.S. Geological Survey used Lidar to monitor coastal vegetation growth.

Since then, the technology has evolved, and Lidar systems have become smaller and even more precise. This has made Lidar an attractive option to provide “eyes” for self-driving vehicles, as these vehicles need to quickly develop images of their surrounding environment to avoid collisions with pedestrians, animals, obstacles, and other vehicles.

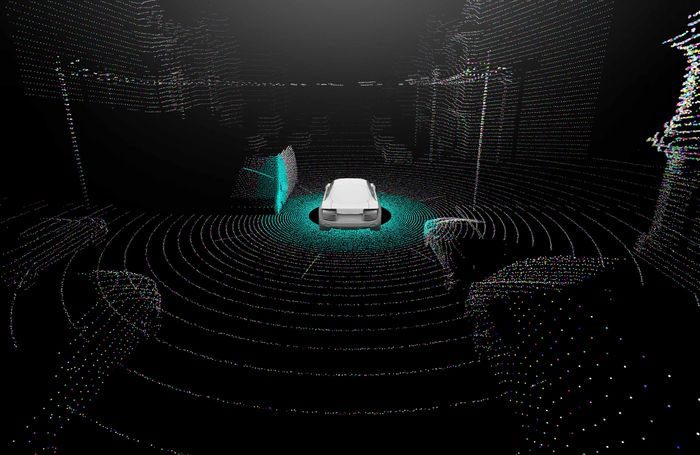

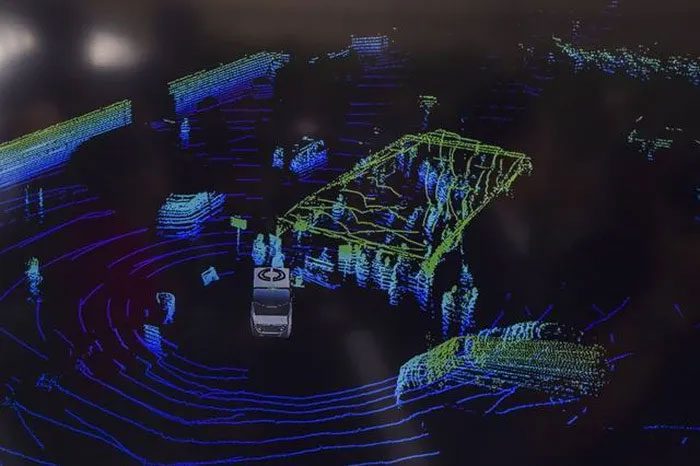

Lidar systems map their environment by sending laser pulses outward. When a pulse encounters an object or obstacle, it reflects back to the sensor. The system then receives the pulse and calculates the distance between itself and the object based on the time elapsed between sending the pulse and receiving the reflected beam.
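The time-of-flight calculation described above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: the function name `distance_from_round_trip` and the 200-nanosecond example value are assumptions chosen for clarity. The pulse travels to the object and back, so the one-way distance is half the round-trip path.

```python
# Speed of light in a vacuum, in metres per second (air is very close to this).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(elapsed_s: float) -> float:
    """Return the one-way distance in metres for a round-trip time in seconds.

    Hypothetical helper for illustration: distance = c * t / 2, because the
    pulse covers the sensor-to-object distance twice (out and back).
    """
    if elapsed_s < 0:
        raise ValueError("elapsed time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * elapsed_s / 2.0


if __name__ == "__main__":
    # Example value (an assumption): a reflection arrives 200 ns after emission.
    print(f"{distance_from_round_trip(200e-9):.2f} m")  # prints "29.98 m"
```

The example shows why timing precision matters: an object about 30 metres away produces a round trip of only about 200 billionths of a second.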

Lidar does this rapidly, with some systems emitting millions of pulses per second. As the reflected pulses return, the system builds a picture of the world around the vehicle, and computer algorithms can then identify the shapes of cars, people, and other obstacles.
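One way to picture how individual returns become a three-dimensional "picture" is to convert each measured range and beam direction into an (x, y, z) point. The sketch below is illustrative only: the names `to_cartesian` and `build_point_cloud`, the axis convention (x forward, y left, z up), and the sample readings are all assumptions, and real sensors also have to handle timing, calibration, and vehicle motion.

```python
import math


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one return (range plus beam angles) into an (x, y, z) point.

    Assumed convention: x points forward, y to the left, z up; azimuth is
    measured in the horizontal plane, elevation above that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


def build_point_cloud(returns):
    """Map an iterable of (range_m, azimuth_deg, elevation_deg) to 3D points."""
    return [to_cartesian(r, az, el) for r, az, el in returns]


if __name__ == "__main__":
    # Made-up sample returns: straight ahead, to the left, and slightly upward.
    sample = [(10.0, 0.0, 0.0), (5.0, 90.0, 0.0), (20.0, 45.0, 10.0)]
    for point in build_point_cloud(sample):
        print(tuple(round(coord, 2) for coord in point))
```

Collecting many such points produces a point cloud, which is the raw material that shape-recognition algorithms then work on.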

How is Lidar Used?

Radar has been used in the automotive world for many years and has been integrated into various ADAS features. Blind-spot monitoring systems use radar to detect vehicles before a lane change, adaptive cruise control uses radar to maintain a consistent distance from the vehicle ahead, and automatic emergency braking systems use radar to stop the vehicle before contact with an obstacle.

Lidar promises to enhance these features by mapping the environment in finer detail and refreshing that map rapidly. Where a unit offers a 360-degree field of view, Lidar can also improve the accuracy and quality of safety alerts all around the vehicle.

How Lidar Works with AV

First, it is important to note that fully autonomous vehicles are not currently available for sale to consumers. Driver assistance systems such as Tesla’s Autopilot and Cadillac’s Super Cruise can take over much of the driving for extended periods, and Super Cruise allows hands-free operation, but only in very limited scenarios, such as on compatible highways, and the driver must remain attentive.

As self-driving vehicles move toward the mainstream, the amount of data they require and the speed at which it must be collected are staggering. To support a decision-making process anywhere near the complexity that the human brain can manage, autonomous vehicles need an accurate, real-time picture of their surroundings. This is particularly true in urban environments, where drivers encounter other people, animals, and various types of vehicles within a short timeframe.

Drawbacks of Lidar

Lidar is considered the standard by many companies working on autonomous vehicles, but the technology is not embraced by all automakers. Tesla and its CEO, Elon Musk, have criticized reliance on Lidar as the basis of AV perception, arguing that the technology merely recreates an image of the surrounding environment rather than providing a representation of what is actually occurring.

One example of this issue is with small obstacles on the road. Lidar is certainly capable of identifying that there is something on the road that needs to be avoided, but it cannot precisely determine what it is seeing. For Lidar, a balloon floating in the road looks just like a large rock, leading to situations where a non-threat is treated as a threat, and a real threat might not be recognized as such. In a vacuum, this is not a significant issue, but in the real world, it is not ideal for a vehicle to misinterpret what it is seeing.

Tesla argues, as do others, that using vision-based systems with cameras can achieve similar perception to that provided by Lidar, but with the added safety of visualizing the actual environment. Tesla’s systems use cameras and learn over time, which helps them better handle unpredictable environments. This functionality, combined with the fact that cameras are currently much cheaper than Lidar, has led some to question the necessity of expensive sensors.

The question of which sensor or camera is best for autonomous vehicles is more complex than whether a vehicle can “see” or not. Testing to date has primarily been conducted in somewhat controlled and limited environments, which do not fully represent the conditions that AVs might encounter on a daily basis.