An AI model named Mind-Vis, trained on a large dataset of more than 160,000 brain scans, can reconstruct images that match what a person was looking at up to 84% of the time.

Just a few years ago, images reconstructed from human brain activity were far from accurate. Now a team of researchers from Stanford University, the National University of Singapore, and the Chinese University of Hong Kong (CUHK) is getting closer to faithful reconstructions.

In the experiment, the research team recorded the brain activity of participants while they were shown a series of images. On the left are the images shown to the volunteers during the brain scans; on the right are the images the AI generated.

The scientists used functional magnetic resonance imaging (fMRI) to record the participants' brain activity in detail. They then fed these signals into an AI model, training it to link patterns of brain activity with particular images.

The images the AI reconstructs from brain activity are striking, if imperfect. For example, a photo of a house and driveway produced a similarly colored blend of a bedroom and a living room.

Meanwhile, an ornate stone tower shown to a volunteer led the AI to generate a similar tower, but with windows positioned at unrealistic angles. A bear, in turn, came out as a strange, shaggy creature resembling a dog.

According to NBC, the researchers found that the AI model could generate images that matched the attributes of the original photos, such as color, texture, and overall theme, up to 84% of the time. The research team believes that within the next decade, this technology could be widely available to anyone, anywhere.

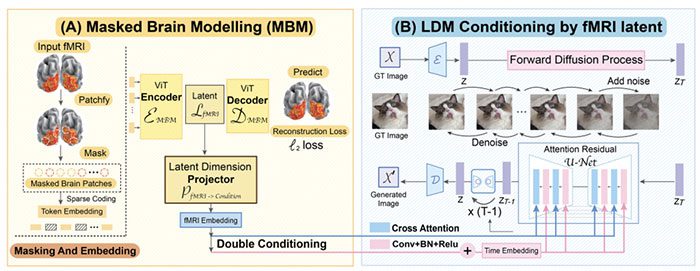

The AI model, called Mind-Vis, was trained on a large dataset of more than 160,000 brain scans. To produce images as accurate as those seen in the experiment, Mind-Vis underwent hours of training in the laboratory.

In the second part of the training process, the model was trained on a different dataset from a smaller group of participants, whose brain activity was recorded with fMRI while they viewed images.

From there, the AI model learned to link specific brain activity with visual characteristics such as color, shape, and texture. According to NBC, training the model required recording each participant's brain activity for about 20 hours.
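
To make the two-stage idea concrete, here is a minimal Python sketch using synthetic numbers. It is not the researchers' method, which this article does not describe: principal component analysis stands in for the first training stage on many unlabeled scans, ridge regression stands in for the second stage on a small paired dataset, and every name and size (`N_VOXELS`, `make_scans`, `encode`, the three "image features") is a hypothetical choice for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: voxels per scan, hidden factors, image features.
N_VOXELS, N_LATENT, N_FEATURES = 50, 8, 3

# Synthetic stand-in for brain data: hidden factors mixed into noisy voxels.
mixing = rng.normal(size=(N_LATENT, N_VOXELS))

def make_scans(n):
    """Return (hidden factors, simulated scans) for n synthetic recordings."""
    latent = rng.normal(size=(n, N_LATENT))
    scans = latent @ mixing + 0.05 * rng.normal(size=(n, N_VOXELS))
    return latent, scans

# Stage 1 (assumption: PCA as a stand-in for large-scale pretraining).
# Learn a compact representation from many scans with no image labels.
_, unlabeled_scans = make_scans(2000)
mean_scan = unlabeled_scans.mean(axis=0)
_, _, vt = np.linalg.svd(unlabeled_scans - mean_scan, full_matrices=False)
components = vt[:N_LATENT]  # shape (N_LATENT, N_VOXELS)

def encode(scans):
    """Project scans onto the representation learned in stage 1."""
    return (scans - mean_scan) @ components.T

# Stage 2 (assumption: ridge regression as a stand-in for fine-tuning).
# A small paired dataset links scans to made-up color/shape/texture scores.
true_map = rng.normal(size=(N_LATENT, N_FEATURES))
latent_small, paired_scans = make_scans(200)
image_features = latent_small @ true_map

codes = encode(paired_scans)
ridge = 1e-2
weights = np.linalg.solve(
    codes.T @ codes + ridge * np.eye(N_LATENT),
    codes.T @ image_features,
)

# Check on scans the model has not seen: predicted vs. actual features.
latent_test, test_scans = make_scans(100)
predicted = encode(test_scans) @ weights
target = latent_test @ true_map
correlation = np.corrcoef(predicted.ravel(), target.ravel())[0, 1]
print(f"held-out correlation: {correlation:.3f}")
```

The point of the split is that the first stage needs only scans, which can be gathered in large numbers, while the second stage needs scans paired with the images people actually saw, which are far costlier to collect.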

Zijiao Chen, a National University of Singapore researcher who worked on the project, believes that as this technology advances, it could be applied in medicine, psychology, and neuroscience.

However, like many recent developments in AI, brain-reading technology raises ethical and legal concerns. Some experts argue that in the wrong hands, this AI model could be used to interrogate or surveil individuals.