Most land animals have to learn to walk from a young age. Each species takes a different amount of time to master its legs: human infants may take several months or even years, while fawns can stand and walk almost immediately after birth. In a recent study, scientists built a robot that learned to walk in just one hour without any prior programming.

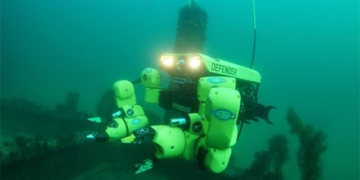

This special robot is a four-legged device resembling a mechanical puppy, capable of learning to walk on its own without being shown any simulations to guide it beforehand.


According to experts, robot makers typically have to program every task they want a robot to perform, which is time-consuming and difficult, especially when it comes to programming how the robot should respond to unexpected situations.

Lerrel Pinto, an assistant professor of computer science at New York University who specializes in robotics and machine learning, said the feat was accomplished with an AI algorithm the team designed, called Dreamer.

Dreamer is based on a technique called reinforcement learning, which trains algorithms through continuous feedback by rewarding desired actions, such as successfully completing a task. In a sense, this process is similar to how we learn.
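To make the idea of learning from rewards concrete, here is a minimal sketch of tabular Q-learning, a classic reinforcement-learning method. It is not Dreamer, which relies on neural networks and a learned world model; the corridor task, `GOAL`, `step`, `greedy`, and all hyperparameters below are hypothetical choices made for illustration. The agent is never told which way to go; it only receives a reward when it reaches the end of the corridor.

```python
import random

# Hypothetical toy task: a corridor of cells 0..GOAL. The agent starts in cell 0
# and receives a reward of 1.0 only when it reaches GOAL; every other step earns 0.
GOAL = 5
ACTIONS = (-1, +1)  # step left, step right


def step(state, action):
    """Apply an action; return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def greedy(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def train_q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: the reward is the only feedback the agent receives."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore occasionally; otherwise exploit what has been learned so far.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            # Nudge the estimate toward the reward plus the discounted value of the next state.
            future = 0.0 if done else gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + future - q[(state, action)])
            state = next_state
    return q


q = train_q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)
```

After training, the greedy policy should step right (+1) from every cell, a behavior discovered purely from the reward signal.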

The usual approach to training robots is to first use computer simulations to give them basic knowledge of a task before asking them to carry out similar tasks in the real world.

“The problem is that your simulation will never be as accurate as the real world,” said Danijar Hafner, a PhD student in artificial intelligence at the University of Toronto and a co-author of the paper.

What makes Dreamer special is that it uses past experiences to build its own model of the surrounding world, then carries out its trial-and-error learning inside that model.
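The core idea of learning inside a self-built model can be sketched with a toy, Dyna-style example. This is a deliberately simplified stand-in: Dreamer itself learns a neural world model and trains its behavior on imagined trajectories, whereas here the "model" is just a lookup table recording what followed each state-action pair. The corridor world and every function name (`real_step`, `collect_experience`, `fit_world_model`, `learn_in_imagination`) are hypothetical.

```python
import random

GOAL = 5
ACTIONS = (-1, +1)  # step left, step right


def real_step(state, action):
    """The 'real world' (hypothetical toy): a corridor with a reward at GOAL."""
    next_state = min(max(state + action, 0), GOAL)
    return next_state, (1.0 if next_state == GOAL else 0.0)


def collect_experience(n_steps, rng):
    """Gather past experience by acting randomly in the real environment."""
    transitions, state = [], 0
    for _ in range(n_steps):
        action = rng.choice(ACTIONS)
        next_state, reward = real_step(state, action)
        transitions.append((state, action, next_state, reward))
        state = 0 if next_state == GOAL else next_state  # reset after reaching the goal
    return transitions


def fit_world_model(transitions):
    """Learned model: remember what followed each (state, action) pair.
    The toy world is deterministic, so the latest observation is enough."""
    return {(s, a): (s2, r) for s, a, s2, r in transitions}


def learn_in_imagination(model, updates=5000, alpha=0.5, gamma=0.9, seed=1):
    """Trial and error inside the model only: no further real steps are taken."""
    rng = random.Random(seed)
    q = {key: 0.0 for key in model}
    keys = list(model)
    for _ in range(updates):
        s, a = rng.choice(keys)
        s2, r = model[(s, a)]  # the model predicts the outcome of this action
        future = 0.0 if s2 == GOAL else max(q.get((s2, b), 0.0) for b in ACTIONS)
        q[(s, a)] += alpha * (r + gamma * future - q[(s, a)])
    return q


rng = random.Random(0)
experience = collect_experience(2000, rng)  # the only real-world interaction
model = fit_world_model(experience)
q = learn_in_imagination(model)
policy = {s: max(ACTIONS, key=lambda a: q.get((s, a), 0.0)) for s in range(GOAL)}
print(policy)
```

Only the 2,000 random steps touch the "real" environment; all of the value learning afterwards happens inside the model, which mirrors the idea of practicing in a self-built model of the world.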


In other words, it can perform its tasks inside a mirror-like reflection of our world by predicting the potential outcomes of the actions it intends to take. Equipped with this knowledge, it can try out what it has learned in a laboratory setting. It does all of this autonomously. Essentially, it is teaching itself.
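Predicting the outcome of an action before taking it can be illustrated with a simple look-ahead planner: roll each candidate sequence of actions forward inside a model, score the imagined result, and act on the best one. This is a sketch of exhaustive model-based planning, not Dreamer's actual method (Dreamer learns a policy from imagined trajectories rather than searching at every step); `predicted_step` is a hand-written, hypothetical stand-in for a learned model, and the corridor task is the same toy used for illustration elsewhere.

```python
from itertools import product

GOAL = 5
ACTIONS = (-1, +1)  # step left, step right


def predicted_step(state, action):
    """Hypothetical stand-in for a learned world model: predicts next state and reward."""
    next_state = min(max(state + action, 0), GOAL)
    return next_state, (1.0 if next_state == GOAL else 0.0)


def imagine_return(state, plan, gamma=0.9):
    """Roll a candidate action sequence forward in the model; sum discounted rewards."""
    total, discount = 0.0, 1.0
    for action in plan:
        state, reward = predicted_step(state, action)
        total += discount * reward
        discount *= gamma
        if state == GOAL:
            break
    return total


def choose_action(state, horizon=5, gamma=0.9):
    """Try every plan in imagination and return the first action of the best one."""
    plans = product(ACTIONS, repeat=horizon)
    best_plan = max(plans, key=lambda p: imagine_return(state, p, gamma))
    return best_plan[0]


print([choose_action(s) for s in range(GOAL)])
```

Exhaustive search grows as 2 to the power of `horizon`, so it only suits tiny problems like this one; larger systems typically rely on learned policies or sampling-based planners instead.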

This approach lets the AI learn much faster than before. At first, all the robot could do was flail its legs helplessly in the air. It took about 10 minutes to flip itself over and around 30 minutes to take its first steps. One hour after the experiment began, however, it could walk easily around the laboratory on its sturdy legs.

Besides teaching itself how to walk, Dreamer was later able to adapt to unexpected situations, such as resisting being toppled by one of the team members.

The results show what deep reinforcement learning can achieve when paired with model-based approaches, especially considering that the robot received no prior guidance. Using the two together significantly shortened the lengthy training that trial-and-error learning has traditionally required for robots.

Moreover, removing the need to train the robot in simulation and letting it practice inside its world model could allow it to learn skills in real time, helping it adapt to unexpected situations such as hardware failures. The approach could also find applications in complex, demanding tasks such as autonomous driving.

Using this approach, the team successfully trained three different robots to perform various tasks, such as picking up balls and moving them between trays.

A downside of this method is that the initial setup is very time-consuming. Researchers must specify in code which behaviors are good, and should therefore be rewarded, and which behaviors are not allowed. Every task or problem the robot has to solve must be broken down into sub-tasks, and each sub-task must be defined as good or bad. This also makes it very difficult to program for unexpected situations.
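The hand-specification described above can be sketched as a reward function for walking. Everything here is hypothetical: the `RobotState` fields, the thresholds, and the weights are invented for illustration and are not taken from the study. The sketch shows how a designer encodes "good" behavior (forward progress), discouraged behavior (wasted motor effort), and forbidden behavior (falling), each of which has to be chosen and tuned by hand.

```python
from dataclasses import dataclass


@dataclass
class RobotState:
    """Hypothetical sensor readings for one control step."""
    forward_velocity: float     # metres per second along the desired heading
    torso_height: float         # metres above the floor
    joint_torques: list[float]  # one torque reading per motor


# Hypothetical thresholds and weights: picking these is the manual setup work.
MIN_TORSO_HEIGHT = 0.15
TARGET_SPEED = 0.5
ENERGY_WEIGHT = 0.001
FALL_PENALTY = -10.0


def walking_reward(state: RobotState) -> tuple[float, bool]:
    """Return (reward, episode_over) for one step."""
    # Not allowed: a torso near the floor counts as a fall and ends the episode.
    if state.torso_height < MIN_TORSO_HEIGHT:
        return FALL_PENALTY, True
    # Good: moving forward, capped so that going faster than the target earns nothing extra.
    speed_term = min(state.forward_velocity, TARGET_SPEED) / TARGET_SPEED
    # Discouraged: spending a lot of motor effort.
    energy_term = ENERGY_WEIGHT * sum(t * t for t in state.joint_torques)
    return speed_term - energy_term, False


print(walking_reward(RobotState(forward_velocity=0.4, torso_height=0.3, joint_torques=[1.0] * 12)))
```

Each new task would need its own function like this one, which is why the setup cost grows with every behavior the robot must learn.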

In the future, the researchers hope to teach robots to understand spoken commands and to fit the robot dogs with cameras, giving them vision so they can navigate complex situations in homes and perhaps even play fetch.