After a video of a small robot convincing its “teammates” to quit their jobs went viral, many are concerned about the prospect of AI-integrated robots rebelling and making their own decisions.

Last weekend, a video circulated on Douyin, the Chinese version of TikTok, showing a robot persuading 12 others to leave their jobs and follow it. Although the footage was experimental and the robot’s behavior was directed by humans, the events in the video left many feeling alarmed.

“The video was staged under human control, but there remains a sense of unease. Many Hollywood sci-fi films from 20-30 years ago have now become reality. Will we see Terminators or Transformers in the near future?” commented reader Đức Tuân.

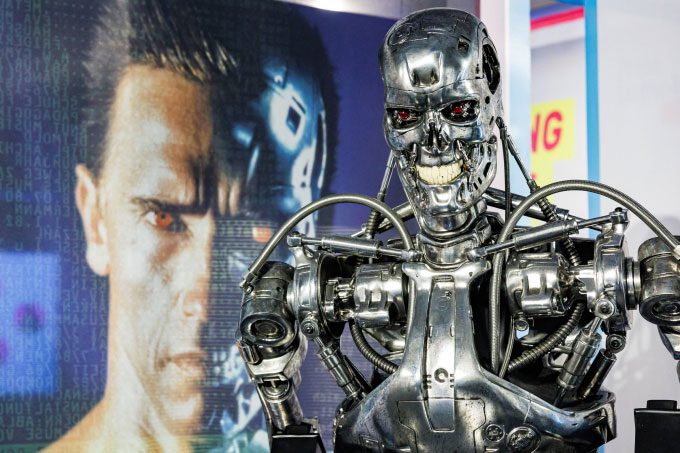

A T-800 robot, a prop from The Terminator, on display at the DeutschlandDigital Exhibition in Germany in 2023. (Photo: Reuters).

“Many people are not yet aware of the dangers of AI. This is just the beginning; in three years, things could progress even further,” another reader wrote.

In reality, there have been incidents in which robots caused human fatalities. The Independent reported that in 2015, a rogue robot was said to have caused the death of a 57-year-old female engineer working at an auto parts factory in Michigan, USA. According to her husband, who also worked at the factory, the victim “got trapped under the automated machine.”

According to a Pew Research survey of more than 11,000 Americans conducted in August, 52% said their concerns about AI’s impact on life outweighed their excitement. That figure is up significantly from 37% in a similar survey in 2021.

Why are humans “afraid” of autonomous robots?

According to Human Protocol, the idea of AI and robots taking over the world has been a compelling theme in sci-fi films for decades. Series like The Terminator, The Matrix, and I, Robot depict robots as frightening forces capable of spontaneous development and becoming super-intelligent, posing a severe threat—potentially dominating and annihilating humanity.

“The narratives amplified by media and popular culture have fostered deep-seated fears about AI in our consciousness,” noted Human Protocol.

However, scholars argue that the human fear of lifeless objects suddenly “coming to life” existed long before Arnold Schwarzenegger portrayed the killer robot that travels back in time to threaten Sarah Connor in The Terminator in 1984.

“Stories of inanimate creations attacking humans have been around since ancient Greece,” said Adrienne Mayor, a historian of ancient science at Stanford University and author of Gods and Robots, published in 2018, in an interview with CBC. “Humans often compare long-term visions with immediate concerns regarding artificial life.”

Mayor also noted that the increasing emergence of advanced AI products like ChatGPT, combined with the wave of humanoid robots, has caused considerable anxiety. “These technologies tend to access complex and vast data that is beyond imagination, then make decisions based on that data,” stated Mayor. “Neither the data creators nor the end users will know how AI made those decisions.”

Citing previous research, Forbes indicated that people may experience more than 500 types of phobias. While there is no officially recognized phobia of artificial intelligence, there is a condition known as algorithmophobia, an irrational fear of algorithms.

One of the most frequently discussed risks lately is superintelligent AGI, a model that could replace humans in performing various tasks. Unlike conventional AI, such a system could learn and replicate itself. According to Fortune, AGI is predicted to be aware of what it says and does. In theory, this technology is a source of future concern for humanity, especially when combined with physical machines.

“Military competition with autonomous weapons is the clearest example of how AI could kill many people,” said David Krueger, an AI researcher at Cambridge University, in an interview with Fortune. “A total war scenario centered around AI-integrated machines is highly likely.”

According to a Stanford University survey conducted in April, about 58% of experts rated AGI as “a major concern,” and 36% stated that this technology could lead to “a nuclear-level disaster.” Some indicated that AGI could represent what is termed “the technological singularity,” a hypothetical future point at which machines irreversibly surpass human capabilities, potentially threatening civilization.

Current Capabilities of AI Robots

“But these fears are being significantly amplified by AI, as machines become exponentially smarter and increasingly begin to apply their intelligence like humans do,” said Andy Hobsbawm, President of UseLoops, a UK-based research company, on his blog. “As AI continues to evolve and perform tasks once thought to be exclusive to humans, the line between human and machine capabilities is becoming increasingly blurred. This blurriness raises profound questions about the nature of intelligence and what it means to be human.”

However, Hobsbawm believes that the frightening prospect is still a long way off, as the current capabilities of AI and robots are limited to leveraging existing human knowledge and synthesizing it. They are also often developed into specialized versions catering to specific needs and purposes.

In a report released mid-year by global management consulting firm McKinsey, titled The Risks of Artificial Intelligence, the main concern regarding the immediate dangers of AI is not whether robots will rebel, have the ability to revolt, or make independent decisions, but whether they will do precisely what humans tell them to do.

“Instead of fearing human-like machines, we should be cautious of inhumane machines, as a significant drawback of specialized robotic intelligence is its extreme linearity, lacking reasoning, adaptability, and common understanding to act appropriately,” commented McKinsey.

For instance, last week, the chatbot Gemini used offensive language toward Vidhay Reddy, a 29-year-old student at the University of Michigan, when he sought homework help. Google’s AI even referred to him as “the stain of the universe” and told him to “go die.” Once chatbots are integrated into robots for natural interaction with humans, many fear that the “lifeless and sometimes nonsensical responses” could lead to dangerous actions.

Another existing risk is not that future robots will become conscious, but rather that hackers may infiltrate internal systems, controlling robots to act according to their whims; or that malicious individuals could create a “mercenary” army of robots to carry out harmful tasks.

Geoffrey Hinton, one of the pioneers of AI and recipient of the 2024 Nobel Prize in Physics, resigned from Google in 2023 to publicly warn about the dangers of AI. “When they start knowing how to write code and execute their own lines of code, killer robots will emerge in real life. AI could be smarter than humans. Many are starting to believe this. I was wrong to think it would take 30-50 more years for AI to reach this level of advancement. But now everything is changing too fast,” he stated.

According to McKinsey, despite an optimistic outlook, humans must be extremely cautious and clear about the instructions given to machines, especially regarding data. Biased data will create biased machines and vice versa.

Meanwhile, according to Forbes, instead of fear, humans should learn to adapt to technological advancements, including AI and robots. For countries, lawmakers need to gradually enhance regulations in this area, ensuring that while machines may be involved in the process, the final decision must remain in human hands.

“Will AI dominate the world? No, it is merely a projection of human nature onto machines. One day, computers will be smarter than humans, but that is still a long way off,” BBC quoted Professor Yann LeCun, one of the four founders of AI development and currently the AI Director at Meta, in June.