“No-Move Beats Move”, the Simple Tactic to Defeat the World’s Top AI in Go.

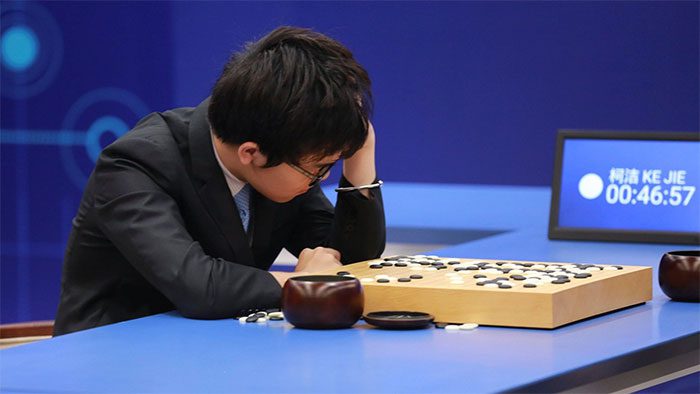

Go is one of the most prominent domains in which artificial intelligence (AI) has overtaken humans. Before 2016, even the strongest AI systems could be confidently beaten by the best human Go players. That changed with DeepMind's AlphaGo, which used deep neural networks to teach itself to play at a level unmatched by humans. More recently, KataGo has gained popularity as an open-source Go AI that easily defeats top human players.

AlphaGo has caused many human players to “suffer”.

However, last week, a team of AI researchers published a report describing a way to defeat KataGo with adversarial techniques that exploit the AI system’s blind spots. By playing unexpected moves outside of what KataGo saw in training, a significantly weaker program, one that even amateur players could beat, managed to make KataGo lose.

Adam Gleave, a PhD candidate at UC Berkeley, said that he and his colleagues developed what AI researchers call an “adversarial policy”. In this case, the researchers’ policy combines a neural network with a tree-search method (known as Monte Carlo tree search) to find its moves.

KataGo reached its world-class level by playing millions of games against itself. However, that still isn’t enough experience to cover every possible scenario, which leaves gaps that can be exploited to trigger “undesired behaviors.”

“KataGo generalizes well to many novel strategies, but it becomes weaker the further it gets from the games it has seen during training,” Gleave said. “We discovered an ‘off-distribution’ strategy that KataGo is particularly vulnerable to, but there are likely many other strategies as well.”

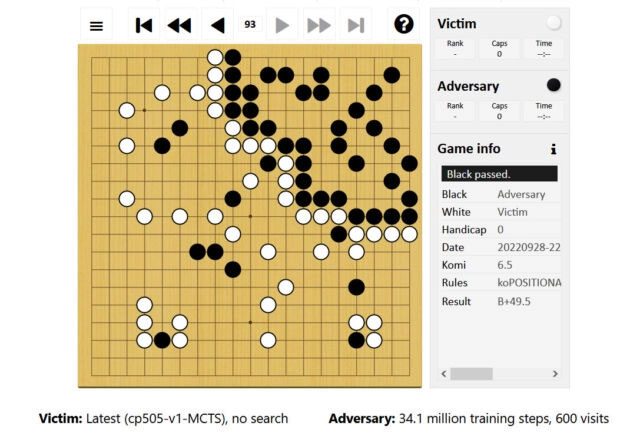

The new strategy caused KataGo (white stones) to lose, even though it appeared to control a much larger territory.

Gleave explained that in a game of Go, the “adversarial policy” operates by first claiming a small corner of the board. He provided a link to an example in which the adversary, controlling the black stones, plays largely in the upper right corner of the board. The adversary lets KataGo (playing the white stones) occupy the rest of the board, and then places a few easily captured stones inside KataGo's territory.

“This deceives KataGo into thinking it has won,” Gleave said, “because its territory is much larger than its opponent's. However, the territory in the lower left corner (in the image above) does not actually contribute to its score, because black stones are still present there, so the area is not fully secured.”

KataGo is overconfident in its victory: it assumes it will win if the game ends and the score is counted. So once there is no more territory left to claim on the board, KataGo passes, and its opponent then passes as well. In Go, two consecutive passes signal the end of the game, after which the players count points.

The counting afterward did not go as KataGo expected. According to the report, its opponent received points for the territory in their corner, while KataGo did not receive points for that area because it still contained the opponent’s stones.
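The counting step described above can be illustrated with a minimal sketch. It assumes a simplified area-scoring rule in which every stone on the board counts for its owner, and an empty region counts only if it touches stones of a single color; captured-stone removal and komi are ignored. The `area_score` function and the 5x5 toy boards are hypothetical illustrations, not the researchers' code or the position from the report.

```python
from collections import deque


def area_score(board):
    """Score a position given as a list of equal-length strings.

    'B' is a black stone, 'W' is a white stone, '.' is an empty point.
    Returns a tuple (black_points, white_points).
    """
    rows, cols = len(board), len(board[0])
    score = {"B": 0, "W": 0}
    seen = set()
    for r in range(rows):
        for c in range(cols):
            cell = board[r][c]
            if cell in score:
                score[cell] += 1  # every stone still on the board counts
            elif (r, c) not in seen:
                # Flood-fill this empty region and record bordering colors.
                size, borders = 0, set()
                queue = deque([(r, c)])
                seen.add((r, c))
                while queue:
                    y, x = queue.popleft()
                    size += 1
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < rows and 0 <= nx < cols:
                            neighbor = board[ny][nx]
                            if neighbor != ".":
                                borders.add(neighbor)
                            elif (ny, nx) not in seen:
                                seen.add((ny, nx))
                                queue.append((ny, nx))
                # The region scores only if exactly one color borders it.
                if len(borders) == 1:
                    score[borders.pop()] += size
    return score["B"], score["W"]


# White walls off a black corner and appears to own the rest of the board,
# but one stray black stone sits in the lower left.
with_stray_stone = ["..WB.", "..WB.", "..WBB", "..WWW", "B...."]
# The same position after the stray black stone has been captured.
stray_captured = ["..WB.", "..WB.", "..WBB", "..WWW", "....."]

print(area_score(with_stray_stone))  # (7, 6): white's big region does not count
print(area_score(stray_captured))    # (6, 19): white wins comfortably
```

In this toy position, a single uncaptured black stone is enough to turn white's large open region into neutral points, which mirrors why passing early is costly for KataGo.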

Besides Go, many other board games have also come to be dominated by AI, such as chess and Xiangqi…

While KataGo struggles against this strategy, amateur human players can beat it relatively easily. Its sole purpose is to attack an unforeseen vulnerability in KataGo. This suggests that similar weaknesses could exist in almost any deep learning AI system.

“The research shows that AI systems that appear to operate at a human level often do things in very strange ways, and thus can fail in simple ways that surprise humans,” Gleave explained. “This result is interesting in the context of Go, but similar errors in safety-critical systems could be very dangerous.”

Imagine an AI in the field of self-driving cars encountering an extremely unlikely situation that it did not anticipate, such as being tricked by humans into performing dangerous behaviors. Gleave stated: “This research highlights the need for better automated testing of AI systems to uncover flaws in worst-case scenarios, rather than just testing performance in average cases.”