The chaotic era of generative AI has made it easier than ever to “draw” human faces using software, leading to new concerns about the blending of reality and deception.

In response to this pressing issue, a group of researchers at the University of Hull has published a new study describing a method for detecting deepfake images generated by AI through the analysis of light reflections in the subject’s iris. This innovative approach employs a technique originally used by astronomers to analyze light emitted from distant galaxies.

Adejumoke Owolabi, a student pursuing a Master of Science degree at the University of Hull, is the lead author of the new scientific report, under the supervision of Professor Kevin Pimbblet, an expert in astrophysics.

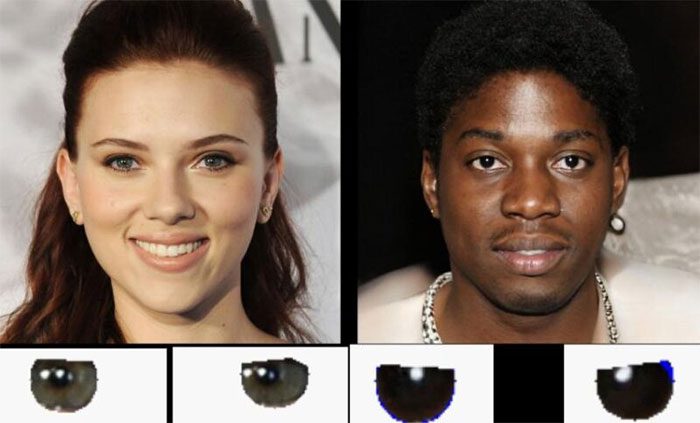

The researchers write: “In this photo, the person on the left (Scarlett Johansson) is real, while the person on the right is generated by AI. Their irises are shown below their faces. The reflections in the irises are consistent for the real person, but inaccurate (from a physical perspective) for the fake one.”

The method is based on a fundamental principle: two eyes illuminated by a light source will contain similar reflected images. Many AI-generated images fail to account for the reflective properties of eyes, resulting in discrepancies in the reflections seen in synthetic images.

In fact, these differences can often be detected with the naked eye. However, using tools from astronomy to measure them is a new approach to detecting fake images.

Details of the New Research

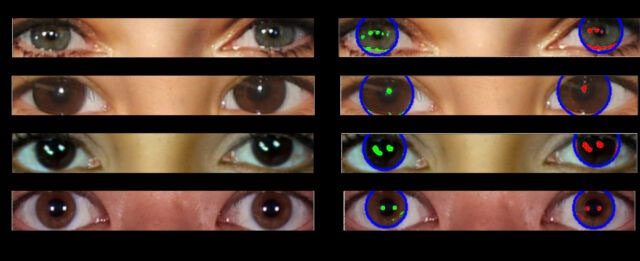

In a statement, Professor Pimbblet explained that Owolabi developed an automated tool for detecting AI-generated images. After allowing the tool to scan a dataset of real and AI-generated images, the research team identified distinct differences in the AI-generated eyes.

Specifically, the team employed a measurement method called the Gini coefficient, used to assess light distribution in galaxy images, to compare the reflected light appearing in the left and right irises.
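To make the idea concrete, here is a minimal sketch of a Gini-based comparison. It uses the form of the Gini coefficient commonly applied to galaxy images (pixel values sorted, then weighted by rank). The function names `gini` and `gini_difference`, the flat lists of pixel intensities, and the use of a simple absolute difference between the two eyes are assumptions made for illustration; the article does not describe the team's actual pipeline or any decision threshold.

```python
def gini(pixels):
    """Gini coefficient of a flat sequence of pixel intensities.

    0.0 means the light is spread evenly over all pixels;
    1.0 means all of the light sits in a single pixel.
    """
    values = sorted(abs(float(p)) for p in pixels)
    n = len(values)
    if n < 2:
        raise ValueError("need at least two pixels")
    total = sum(values)
    if total == 0:
        return 0.0  # an all-dark patch has no light to distribute
    mean = total / n
    # Rank-weighted sum over the sorted values (ranks start at 1).
    weighted = sum((2 * i - n - 1) * v for i, v in enumerate(values, start=1))
    return weighted / (mean * n * (n - 1))


def gini_difference(left_iris, right_iris):
    """Absolute difference between the Gini coefficients of two iris patches.

    Hypothetical comparison step: a small value suggests the reflections
    are distributed similarly in both eyes.
    """
    return abs(gini(left_iris) - gini(right_iris))


if __name__ == "__main__":
    # Toy intensities, invented for illustration only.
    left = [0, 0, 1, 1, 9, 10]
    right = [0, 0, 1, 2, 9, 9]
    mismatched = [3, 4, 4, 3, 4, 3]
    print(gini_difference(left, right))
    print(gini_difference(left, mismatched))
```

In this toy data the two similar patches give a much smaller difference than the mismatched pair, which is the kind of signal the comparison relies on. A real tool would first need to locate and crop the irises, a step this sketch leaves out.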

The real eyes exhibited consistent light reflections.

“To measure the shape of galaxies, we analyze whether they are concentrated in the center, whether they are symmetrical, and how smooth they are. We analyze the distribution of light,” said Professor Pimbblet, comparing the measurement of reflected light in the irises to typical galaxy research.

The team of scientists also applied the CAS parameter method (an acronym for Concentration, Asymmetry, Smoothness) to detect AI-generated images; however, this method was less accurate.
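As an illustration of one CAS component, the sketch below computes a rotational asymmetry index: the image is rotated by 180 degrees and compared with itself pixel by pixel. The function name `rotational_asymmetry`, the list-of-rows image format, and the normalization by twice the total flux are assumptions (normalization conventions vary in the astronomy literature). The article does not say how the team applied CAS to the iris images.

```python
def rotational_asymmetry(image):
    """Rotational asymmetry of a 2D image given as a list of equal-length rows.

    Returns 0.0 for an image that is unchanged by a 180-degree rotation,
    and values approaching 1.0 as the light becomes more lopsided.
    """
    # Rotating by 180 degrees reverses the row order and each row.
    rotated = [row[::-1] for row in image[::-1]]
    total = sum(abs(v) for row in image for v in row)
    if total == 0:
        return 0.0  # an empty image is trivially symmetric
    diff = sum(
        abs(a - b)
        for row, rotated_row in zip(image, rotated)
        for a, b in zip(row, rotated_row)
    )
    # Assumed convention: divide by twice the total flux so the result lies in [0, 1].
    return diff / (2 * total)


if __name__ == "__main__":
    symmetric = [[1, 2, 1], [2, 5, 2], [1, 2, 1]]
    lopsided = [[9, 0], [0, 0]]
    print(rotational_asymmetry(symmetric))
    print(rotational_asymmetry(lopsided))
```

Concentration and smoothness would need their own measurements; only asymmetry is sketched here because it is the simplest of the three to express.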

AI-generated images display inconsistent reflections.

Practical Applications

The advancements in generative AI models and tools for detecting fake images can be likened to an arms race: as new detection methods emerge, AI creators will seek ways to make eyes look more realistic. Moreover, the new method requires clear images of the eyes.

In practice, the method can still make errors: even in photos of “real people doing real things,” the light reflections in the two eyes can differ.

To achieve higher accuracy, future tool developers may combine this method with other criteria, such as hair structure, anatomical precision, skin details, or illogical elements in the scenery.

Professor Pimbblet noted that the method holds great promise in the near future but still produces erroneous results and “cannot be entirely accurate.”

“However, this method provides us with a fundamental countermeasure in the arms race to detect deepfake images,” he added.