Scientists at the Massachusetts Institute of Technology (MIT) have developed an algorithm that reconstructs an approximation of a person’s face using only a recording of their voice.

You may have heard of AI-powered cameras that recognize people by analyzing their facial features. But what if artificial intelligence could work out what you look like solely from the sound of your voice, without comparing it against a database?

This is exactly what a team of researchers at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) has been investigating, and their results are impressive.

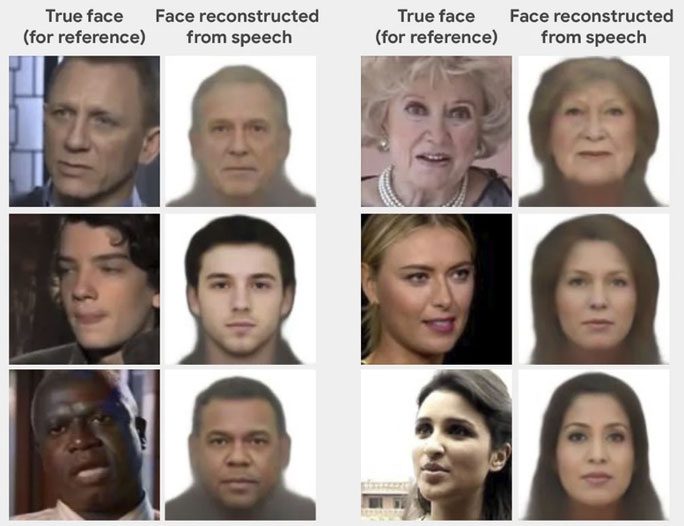

Although the algorithm, named Speech2Face, cannot yet reproduce a person’s exact facial features from their voice alone, its reconstructions capture many details accurately.

Developed by researchers at CSAIL, Speech2Face reconstructs a person’s face from a short voice recording, with remarkable results.

“Our model is designed to reveal the statistical correlations that exist between facial features and the speaker’s voice,” the creators of Speech2Face stated.

“The training data we used consists of a collection of educational videos from YouTube and does not represent the entire global population.”

The research team’s task is to recreate a person’s facial image from a short voice recording. (Image: Speech2Face).

First, the researchers designed a deep neural network and trained it on millions of YouTube and other internet videos of people speaking.

During training, the AI learned correlations between the sound of a voice and the speaker’s appearance. These correlations allowed it to make educated guesses about the speaker’s age, gender, and ethnicity.

No human labeling was involved in training. The AI was simply given a large volume of videos and left to find the correlations between vocal characteristics and facial features on its own.
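For readers curious how a system can learn “without human involvement,” the toy script below illustrates the general idea of self-supervision. It is not the Speech2Face team’s code: it assumes a hypothetical linear “voice encoder” and made-up numeric vectors standing in for voice and face features, and the only thing it learns from is the face vector taken from the same clip as each voice vector.

```python
import random


def make_toy_clips(n, dim_voice=4, dim_face=2, seed=0):
    """Build hypothetical (voice, face) vector pairs, one per toy 'video clip'.

    A hidden linear relationship plus a little noise links each voice vector
    to its face vector; the learner never sees this relationship directly.
    """
    rng = random.Random(seed)
    hidden = [[rng.uniform(-1, 1) for _ in range(dim_voice)] for _ in range(dim_face)]
    clips = []
    for _ in range(n):
        voice = [rng.gauss(0, 1) for _ in range(dim_voice)]
        face = [sum(w * v for w, v in zip(row, voice)) + rng.gauss(0, 0.05) for row in hidden]
        clips.append((voice, face))
    return clips


def predict_face(weights, voice):
    """Hypothetical linear 'voice encoder': map a voice vector to a face vector."""
    return [sum(w * v for w, v in zip(row, voice)) for row in weights]


def mean_squared_error(weights, clips):
    """Average squared distance between predicted and actual face vectors."""
    total = 0.0
    for voice, face in clips:
        predicted = predict_face(weights, voice)
        total += sum((p - f) ** 2 for p, f in zip(predicted, face))
    return total / len(clips)


def train(clips, learning_rate=0.05, epochs=50):
    """Stochastic gradient descent on the squared error.

    The only training signal is the face vector from the same clip,
    so no human-written labels are involved.
    """
    dim_voice, dim_face = len(clips[0][0]), len(clips[0][1])
    weights = [[0.0] * dim_voice for _ in range(dim_face)]
    for _ in range(epochs):
        for voice, face in clips:
            predicted = predict_face(weights, voice)
            for i in range(dim_face):
                error = predicted[i] - face[i]
                for j in range(dim_voice):
                    weights[i][j] -= learning_rate * error * voice[j]
    return weights


if __name__ == "__main__":
    clips = make_toy_clips(200)
    untrained = [[0.0] * 4 for _ in range(2)]
    trained = train(clips)
    print("error before training:", round(mean_squared_error(untrained, clips), 3))
    print("error after training:", round(mean_squared_error(trained, clips), 3))
```

Running it shows the prediction error dropping sharply after training. Real systems rely on deep networks and far richer representations of voices and faces; the linear model here only illustrates the principle of learning from paired data without labels.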

Once trained, the AI could generate portraits from voice recordings alone that, in many cases, closely resembled the speakers’ actual appearance.

Actual image of the speaker (left) and image recreated by AI from their voice (right). (Image: Speech2Face).

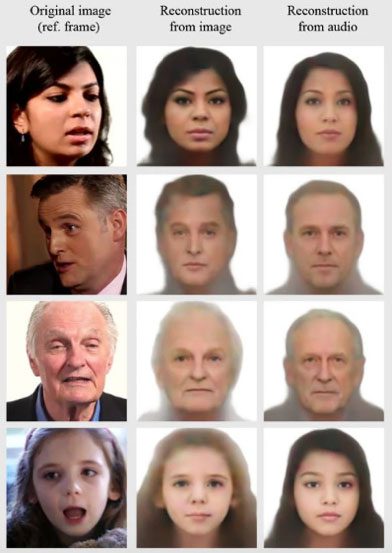

To evaluate the reconstructions further, the researchers used a “face decoder.” From a still photo of a person, this decoder produces a standardized reconstruction of their face that ignores “irrelevant variations” such as camera angle and lighting. Because both reconstructions share the same standardized form, scientists can easily compare a face reconstructed from a voice with the speaker’s actual features.
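One common way to make such comparisons numeric is to represent each face as a feature vector and measure how closely two vectors point in the same direction. The sketch below assumes exactly that: the vectors, the function names, and the choice of cosine similarity are illustrative assumptions, not details taken from the Speech2Face research.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def best_match(voice_feature, face_features):
    """Return the index of the photo-derived feature most similar to the voice-derived one."""
    return max(range(len(face_features)),
               key=lambda i: cosine_similarity(voice_feature, face_features[i]))


if __name__ == "__main__":
    # Hypothetical feature vectors: one derived from a voice, three from photos.
    from_voice = [0.8, 0.1, 0.3]
    from_photos = [[0.1, 0.9, 0.2], [0.7, 0.2, 0.4], [0.0, 0.1, 0.9]]
    print("closest photo:", best_match(from_voice, from_photos))
```

With these made-up numbers the script prints `closest photo: 1`, meaning the second photo’s features point in nearly the same direction as the voice-derived ones.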

In many of the cases studied, spanning various ages, genders, and ethnicities, the AI’s reconstructions were once again remarkably close to the actual faces.

Actual image of the speaker (left), image recreated by AI from their photo (middle), and image recreated by AI from their voice (right). (Image: Speech2Face)

Because it generates portraits from voice, the technology could be used to create an animated avatar of a person on a smartphone or during a video conference when their identity is unknown or when they prefer to keep their real face hidden.

In their paper, presented at the 2019 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), the researchers wrote: “The reconstructed faces could also be used directly to assign faces to machine-generated voices used in home devices and virtual assistants.”

Law enforcement agencies could also use the AI to create a portrait of a suspect when a voice recording is the only evidence. However, such government applications are likely to spark considerable debate over privacy and ethics.

AI creates portraits solely from voice. (Image: Speech2Face Research Team)