Researchers from Carnegie Mellon University have developed a non-invasive brain-computer interface (BCI) that allows individuals to move objects using their thoughts.

The team at Carnegie Mellon University (USA) recently unveiled a brain-computer interface (BCI) that is powered by artificial intelligence (AI) and requires no implanted chip. It lets people move an object to follow a target displayed on a computer screen, simply by thinking.

The researchers employed an AI-based deep neural network to improve accuracy and reduce noise interference during data collection. This approach offers a significant advantage over conventional non-invasive BCIs used for facial recognition, voice recognition, and various other simple tasks.

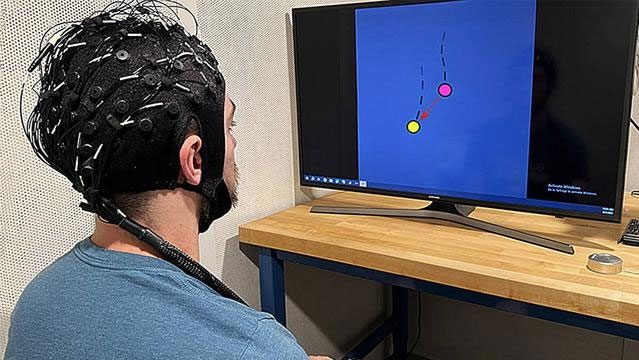

Participants in the experiment use their thoughts to move objects following the movement of a virtual target. (Photo: Carnegie Mellon University).

Compared with shallow artificial neural networks (ANNs), deep neural networks have many hidden layers and nodes, allowing them to handle more complex tasks. This enables the BCI to extract accurate results from large and complex datasets, even when the data is noisy.
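The difference in depth described above can be sketched in a few lines of Python. This is only an illustration, not the Carnegie Mellon model: the layer sizes, the eight input features, the two outputs (imagined here as horizontal and vertical cursor velocity), and the function names are all assumptions, and the weights are random rather than trained.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Create one fully connected layer: small random weights, zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def build_network(layer_sizes, seed=0):
    """Build a network from a list of layer sizes, e.g. [8, 16, 2]."""
    rng = random.Random(seed)
    pairs = zip(layer_sizes, layer_sizes[1:])
    return [make_layer(n_in, n_out, rng) for n_in, n_out in pairs]


def forward(network, features):
    """Pass features through every layer; tanh on hidden layers, linear output."""
    activations = list(features)
    last = len(network) - 1
    for index, (weights, biases) in enumerate(network):
        sums = [
            sum(w * a for w, a in zip(row, activations)) + b
            for row, b in zip(weights, biases)
        ]
        activations = sums if index == last else [math.tanh(s) for s in sums]
    return activations


def count_hidden_layers(network):
    """Every layer except the final output layer is a hidden layer."""
    return len(network) - 1


# Hypothetical sizes: 8 input features, 2 outputs (assumed x/y velocity).
shallow = build_network([8, 16, 2])          # one hidden layer
deep = build_network([8, 16, 16, 16, 2])     # three hidden layers

# Made-up feature values standing in for processed sensor data (not real EEG).
sample = [0.1 * i for i in range(8)]
velocity = forward(deep, sample)
print(count_hidden_layers(shallow), count_hidden_layers(deep), len(velocity))
```

Both networks map the same input to a two-value output. The deep one simply stacks more hidden layers between input and output, which is what gives such models the capacity to learn more complex patterns once they are trained on real data.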

In Carnegie Mellon’s experiment, 28 participants were able to continuously use their thoughts to move an object in accordance with the target displayed on the screen.

The non-invasive BCIs were fitted to the participants rather than implanted. An electroencephalogram (EEG) recorded the participants' brain activity during the experiment, and the EEG data was used to train and improve the AI-based deep neural network.

The research team stated that the deep neural network could instantly understand the action the user wanted to perform with the moving object, merely by analyzing data from the BCI sensors.

Current research results suggest that in the future, AI-driven BCIs will enable individuals to control peripheral devices without the need for hand movements or mechanical actions.

This could simplify interaction with technology, allow researchers to observe brain activity in much greater detail, and improve the lives of people with disabilities.

This is not the first time that non-invasive BCIs have demonstrated their potential. In 2019, researchers similarly demonstrated thought control of a robotic arm that followed a cursor on a computer screen.

Before this non-invasive technology emerged, implanted chips were led by two neurotechnology companies: Neuralink, founded by Elon Musk, and Synchron, whose backers include Bill Gates. A series of other BCI companies followed, researching both invasive and less invasive chips. Invasive chips are implanted directly into the brain, while less invasive chips are placed within the skull.

The use of invasive chips raises concerns about potential damage to the brain and skull during implantation, the risk of hacking, long-term health effects on the brain, and the possibility of manufacturers exploiting neural data, among other worries. This is precisely where non-invasive BCIs have the advantage.

According to the research team, non-invasive BCIs offer numerous benefits, including greater safety, lower cost, and suitability for both patients and the general population, in contrast to the implanted technology developed by Neuralink and Synchron.

Bin He, a member of the research team and a professor of biomedical engineering at Carnegie Mellon, stated: “The team is testing the application of non-invasive BCIs for patients with reduced motor function.”

AI-driven non-invasive BCIs are believed to enhance AI devices and robotic assistants. According to Professor Bin He, “The non-invasive automated BCI technology is being tested to control robotic arms for complex tasks.”